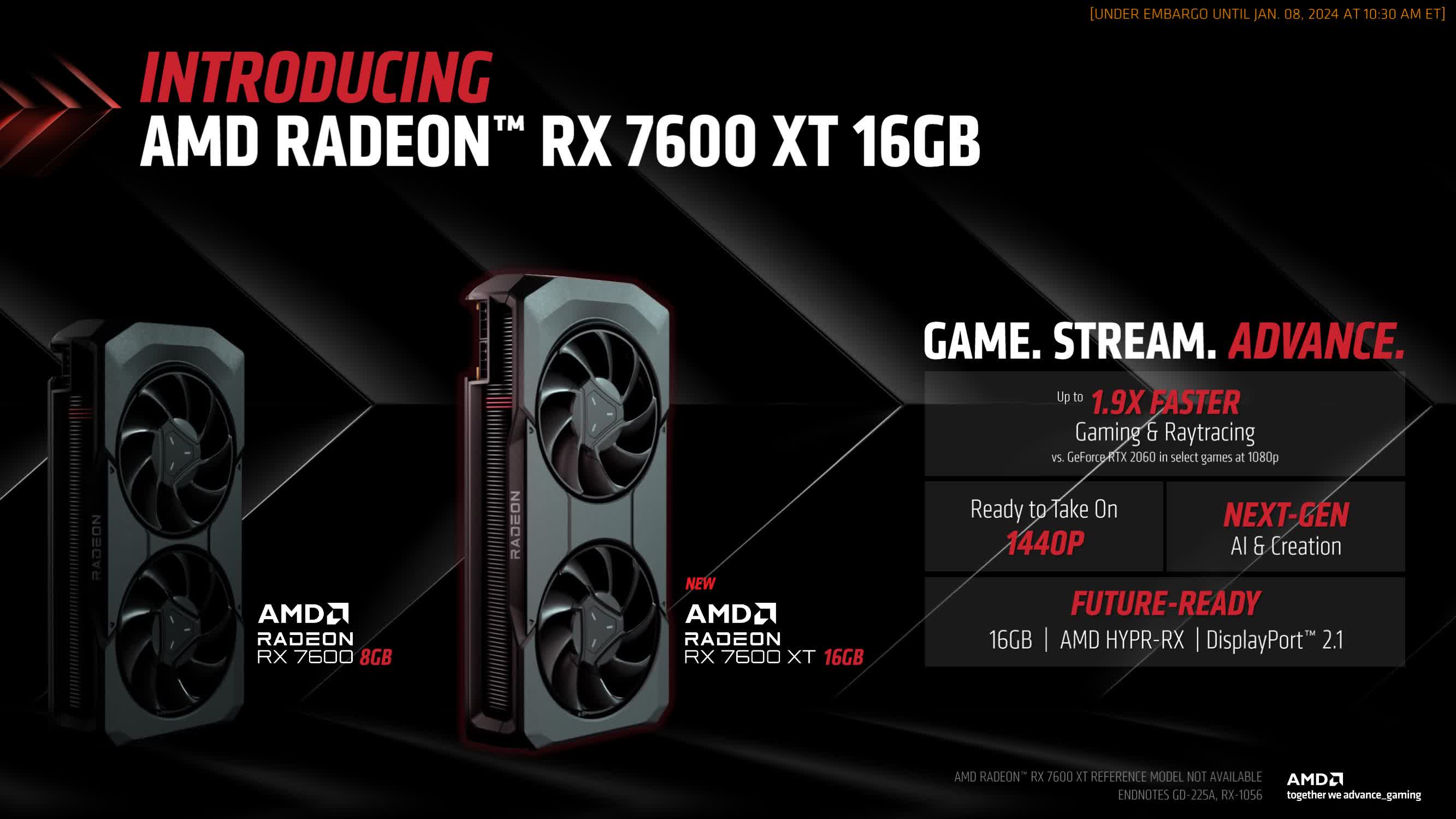

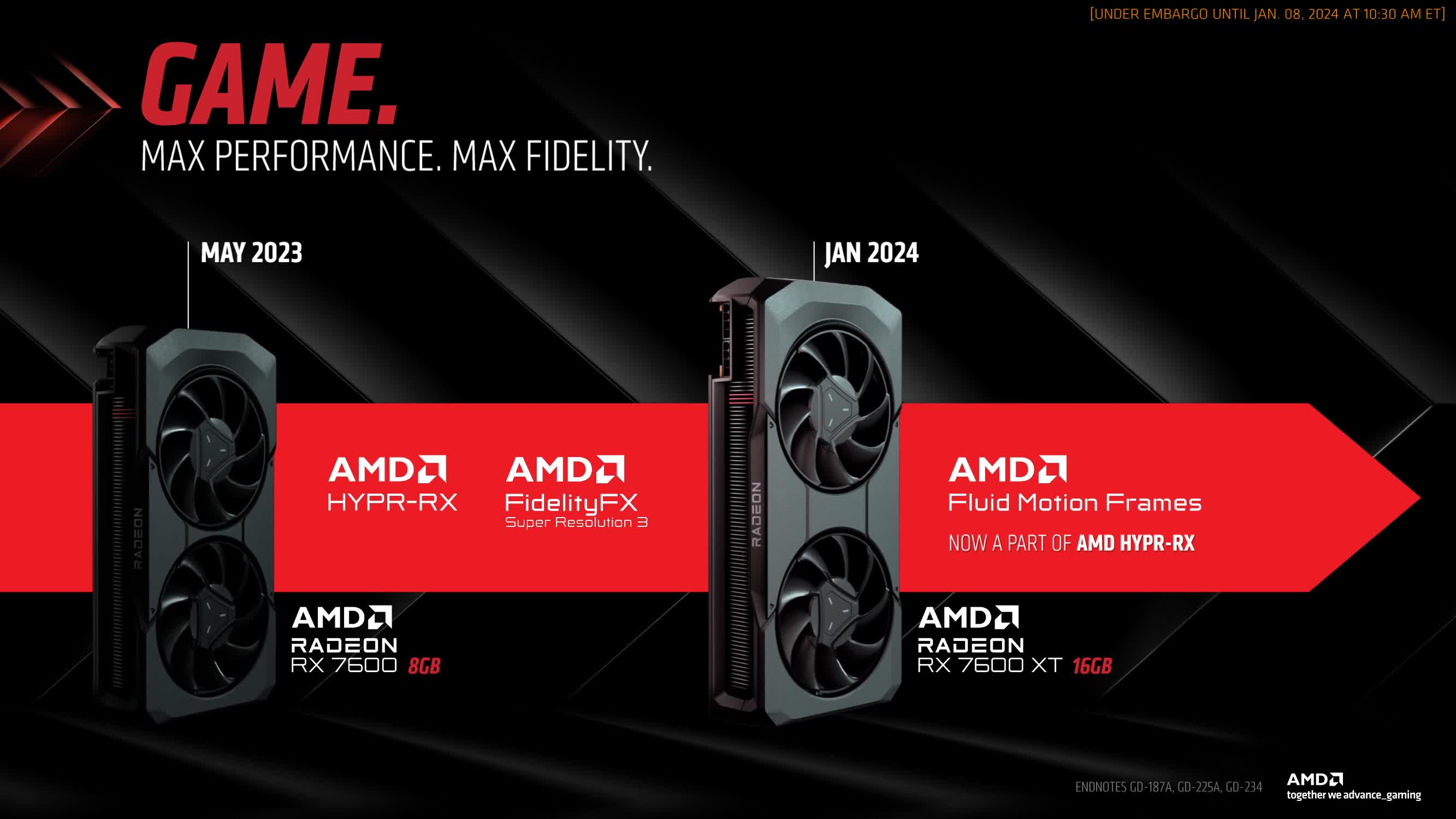

TL;DR: AMD is also launching a graphics card in January, the Radeon RX 7600 XT, priced at $330. This product is straightforward: it's a Radeon RX 7600 with 16GB of memory – doubling the VRAM – and a slight overclock. That puts the two 7600 GPUs much closer in performance than the Radeon RX 6600 and RX 6600 XT were in the previous generation, as those two cards used different core configurations.

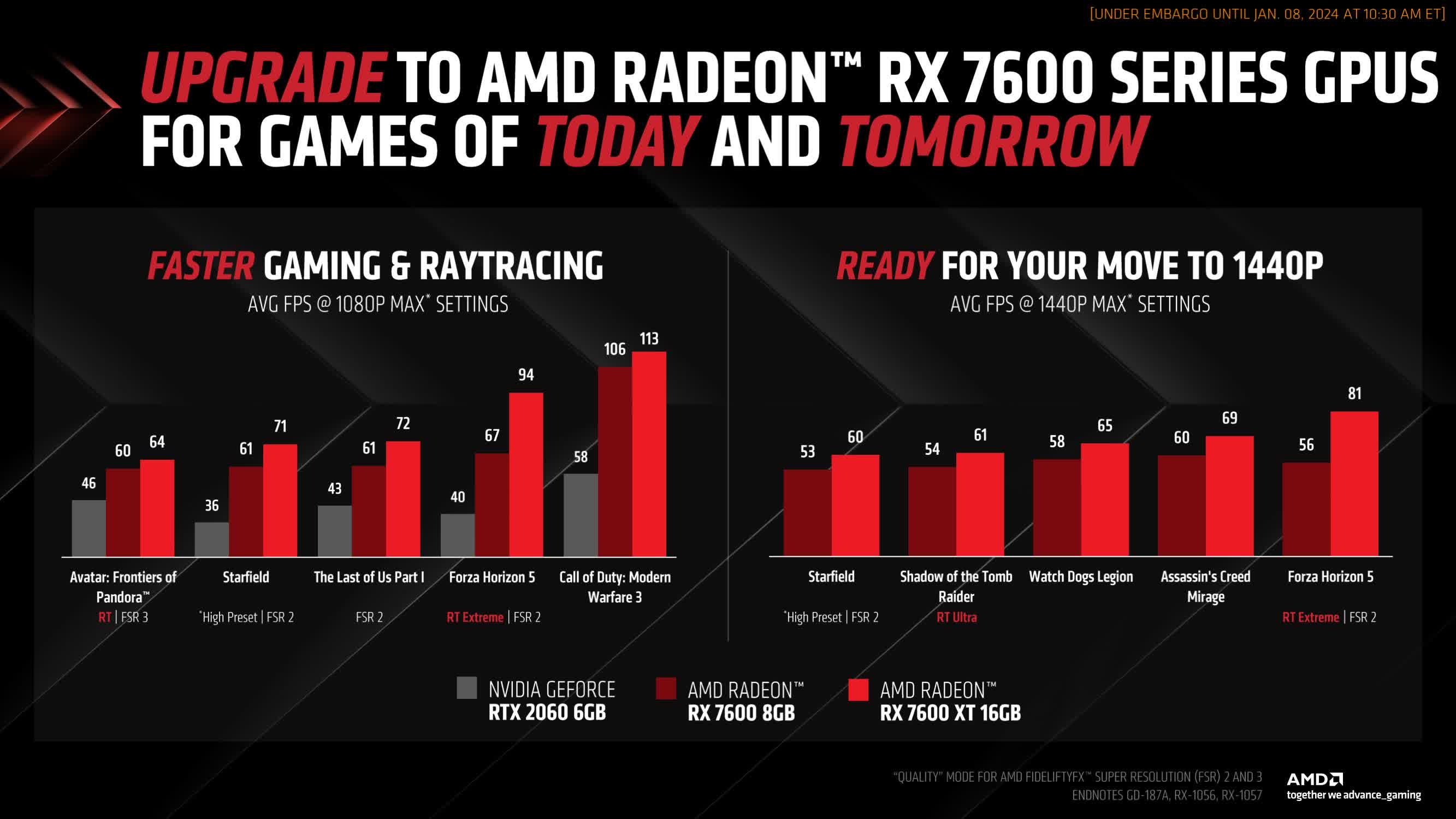

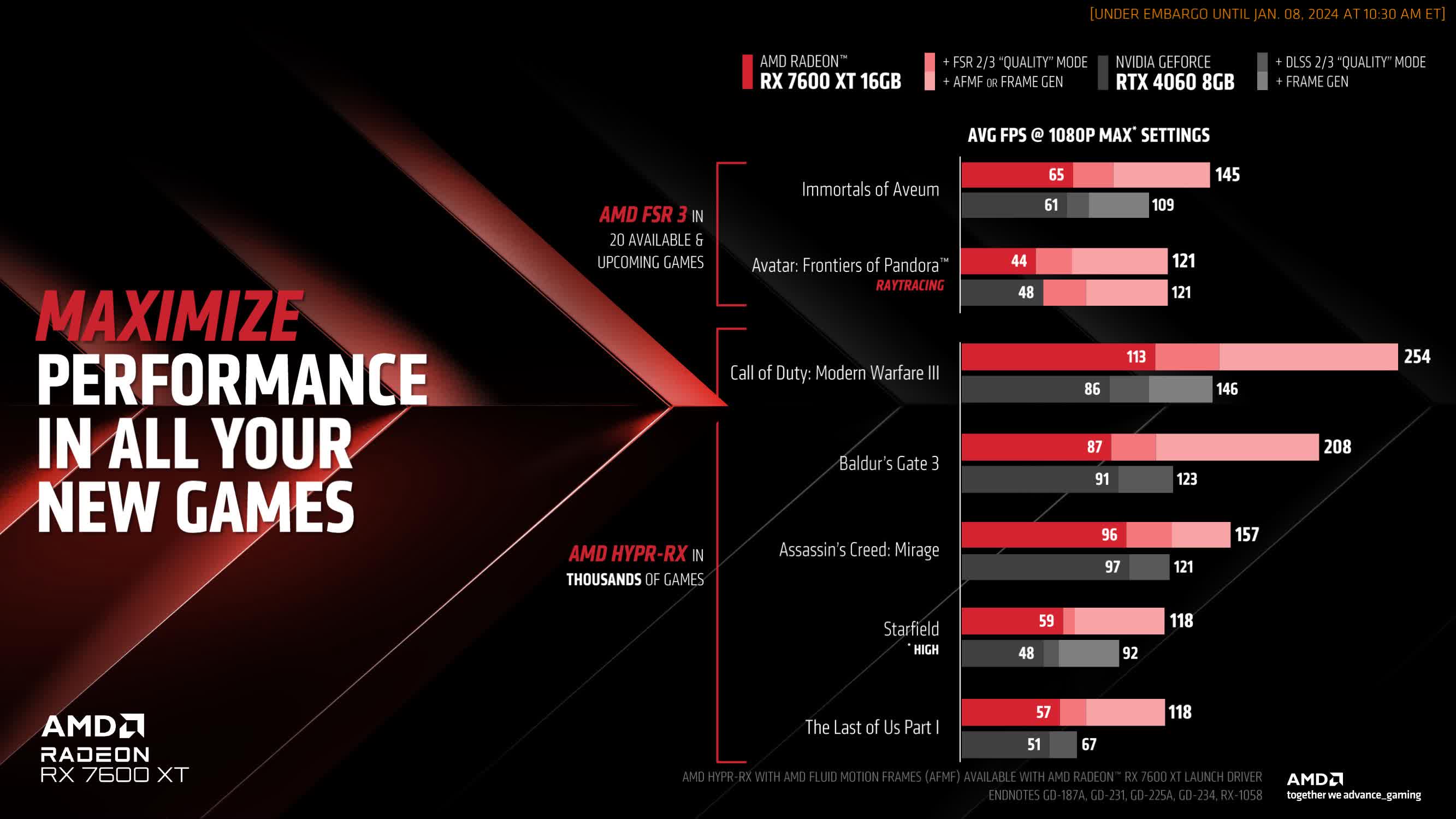

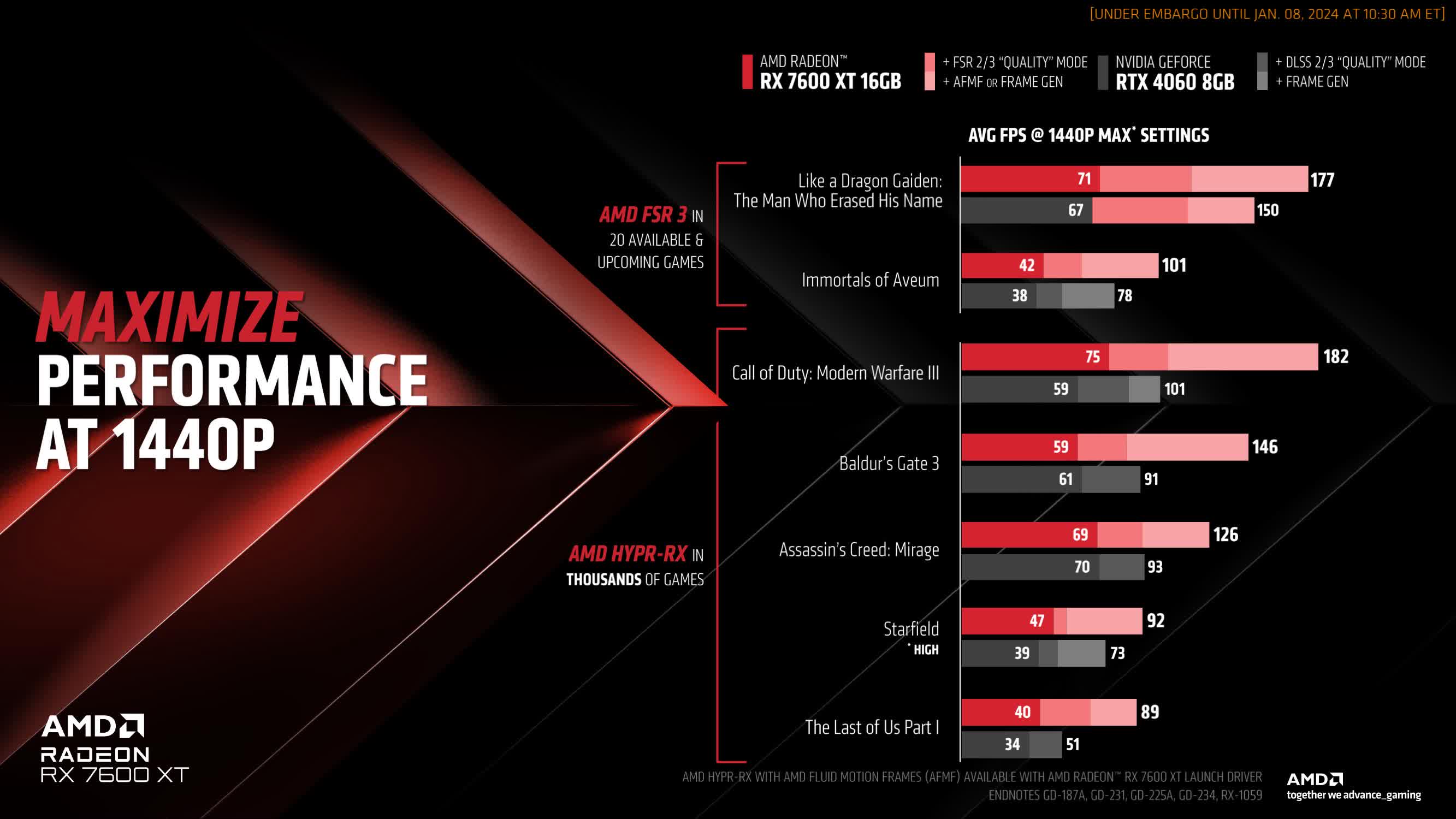

AMD claims substantial performance improvements moving from the 7600 to the 7600 XT, with a chart showing increases ranging from 7 to 40 percent at 1080p, and 13 to 45 percent at 1440p. The larger improvements are plausible if VRAM capacity is a limiting factor on the 8GB model, especially given how much memory many current games require at Ultra settings.

However, we are less certain about the performance gains in games that are not heavily VRAM limited, such as Starfield. The gains there seem higher than expected based on the hardware differences between the cards, necessitating further benchmarking.

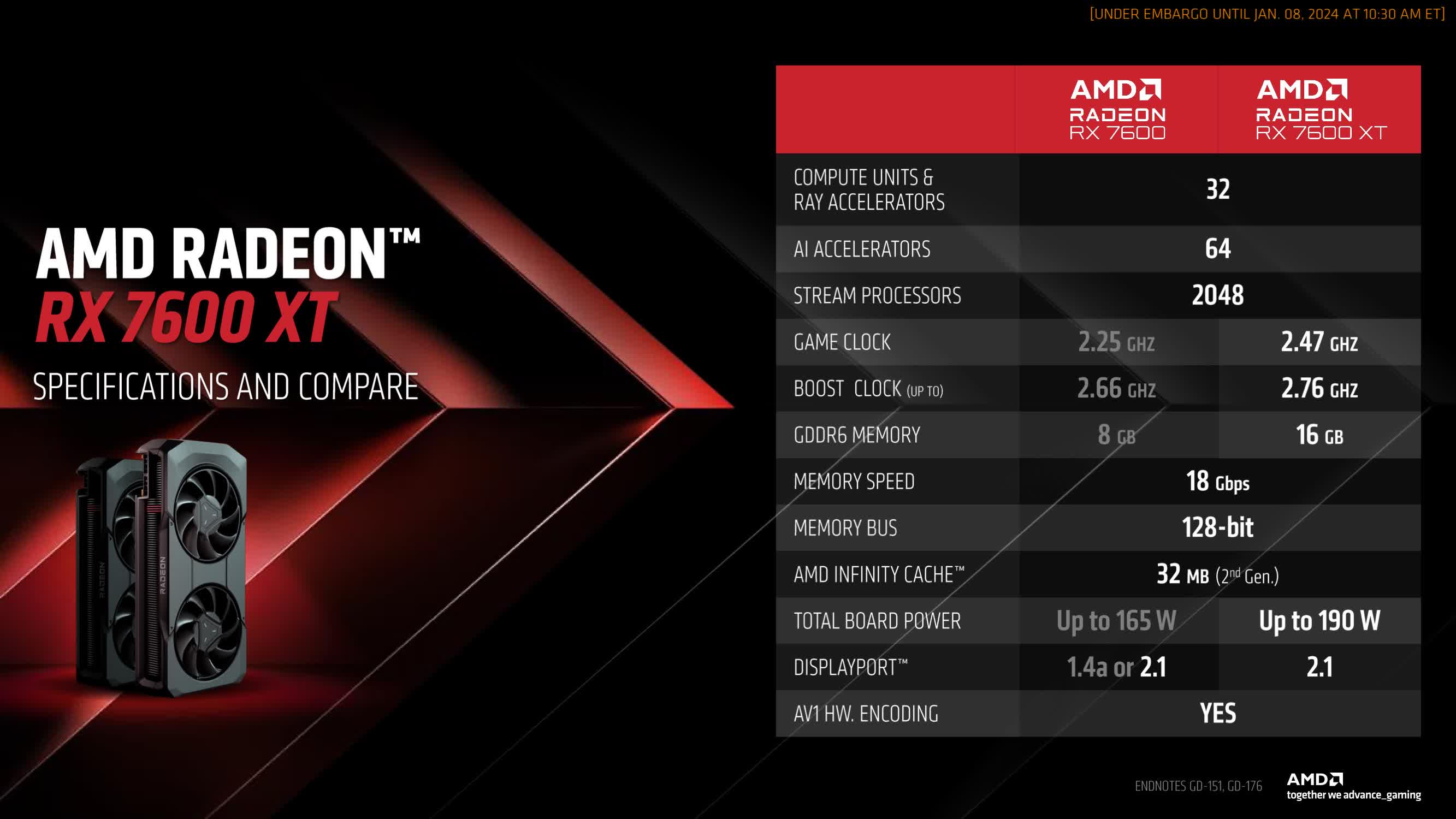

The RX 7600 XT continues to utilize a fully unlocked Navi 33 die with 32 compute units and 2,048 stream processors. AMD claims a 10% higher game clock for the XT model compared to the non-XT, along with 16GB of GDDR6 at the same 18 Gbps on the same 128-bit bus, doubling the capacity while maintaining the same memory bandwidth. The card's board power rating has increased from 165W to 190W to accommodate these changes, marking a 15% increase.
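As a quick sanity check on those figures, the sketch below works out the peak memory bandwidth implied by 18 Gbps GDDR6 on a 128-bit bus, plus the board power increase. The function names are ours and purely illustrative, and the bandwidth formula is the standard theoretical peak (per-pin data rate times bus width, divided by eight bits per byte), not a measured number.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100


# Figures quoted in the article: 18 Gbps GDDR6, 128-bit bus, 165W -> 190W.
bandwidth = memory_bandwidth_gbs(18, 128)
power_increase = percent_increase(165, 190)

print(f"Peak memory bandwidth: {bandwidth:.0f} GB/s")
print(f"Board power increase: {power_increase:.1f}%")
```

Both cards land on the same 288 GB/s peak figure, which is why the extra 8GB adds capacity but not bandwidth.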

We are somewhat disappointed by AMD's chart, which mixes results with and without various features enabled. There's no issue with the baseline numbers comparing the 7600 XT against the RTX 4060, or with like-for-like comparisons such as FSR 3 enabled on both GPUs in Avatar: Frontiers of Pandora or Like a Dragon Gaiden. AMD shows the 7600 XT competing closely with the RTX 4060 in these scenarios.

What seems misleading, in our opinion, are the comparisons where AMD applies their driver-based AFMF frame generation feature to "beat" the GeForce GPU in the listed output frame rate. These features do not deliver equivalent image quality, especially when comparing DLSS 2 Quality mode without frame generation to FSR 2 Quality mode with AFMF frame generation. Based on our tests, AFMF does not produce image quality comparable to other configurations in this chart. Yet, by comparing them as equivalent, AMD can present a significant performance "win" with the new 7600 XT.

It's not great when Nvidia compares RTX 40 GPUs to RTX 30 GPUs and shows the new 40 series cards having a "performance win" through DLSS 3 frame generation – we've extensively explained why frame generation is not a performance-enhancing technology. Nvidia is also guilty of this with the Super series data, although, to its credit, it did provide other, more relevant numbers that didn't include frame generation FPS.

The more companies pursue the highest FPS number through software features (upscaling, frame generation, etc.) without considering image quality, the worse these comparisons become, and the more useless or misleading the information is for consumers.

With AMD's numbers, the situation is even worse because they directly compare Radeon with GeForce, whereas Nvidia only makes this kind of comparison between its own products. Moreover, the image quality gap between AFMF and basic upscaling or native rendering is much larger than the gap with DLSS 3 frame generation. At least AMD did provide native apples-to-apples numbers for a proper comparison.

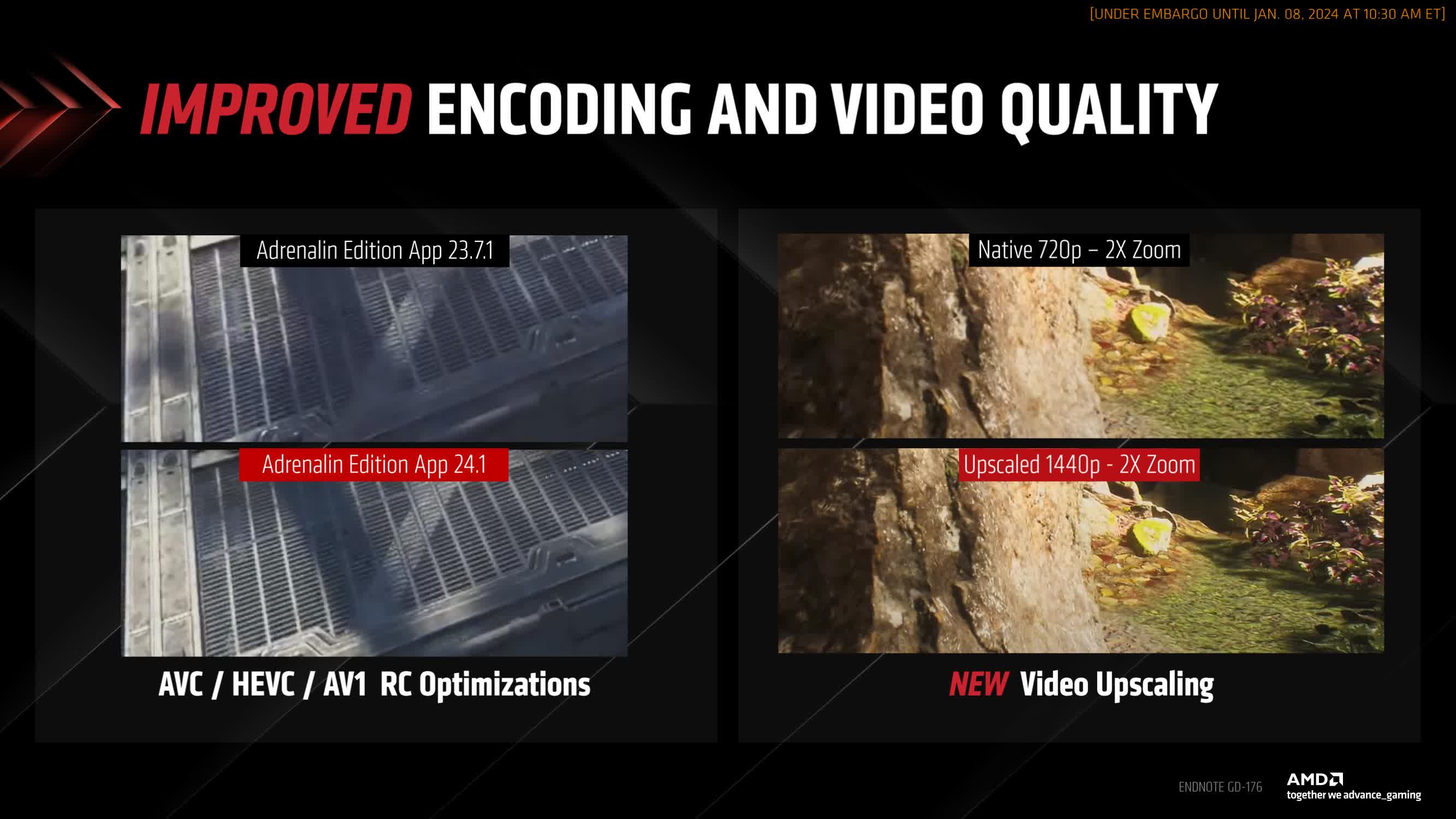

On a more positive note, AMD claims to have improved encoding image quality through rate control optimizations in the latest version of their SDK. This has been implemented in the latest version of Radeon Software 24.1 and applies to H.264, HEVC, and AV1 encoding. Streaming image quality on Radeon GPUs, particularly with H.264, has been criticized for some time now, so it's encouraging to see AMD working on this. Third-party apps will need to update to the latest version of the AMF SDK to benefit from these enhancements, but the prospects look promising.

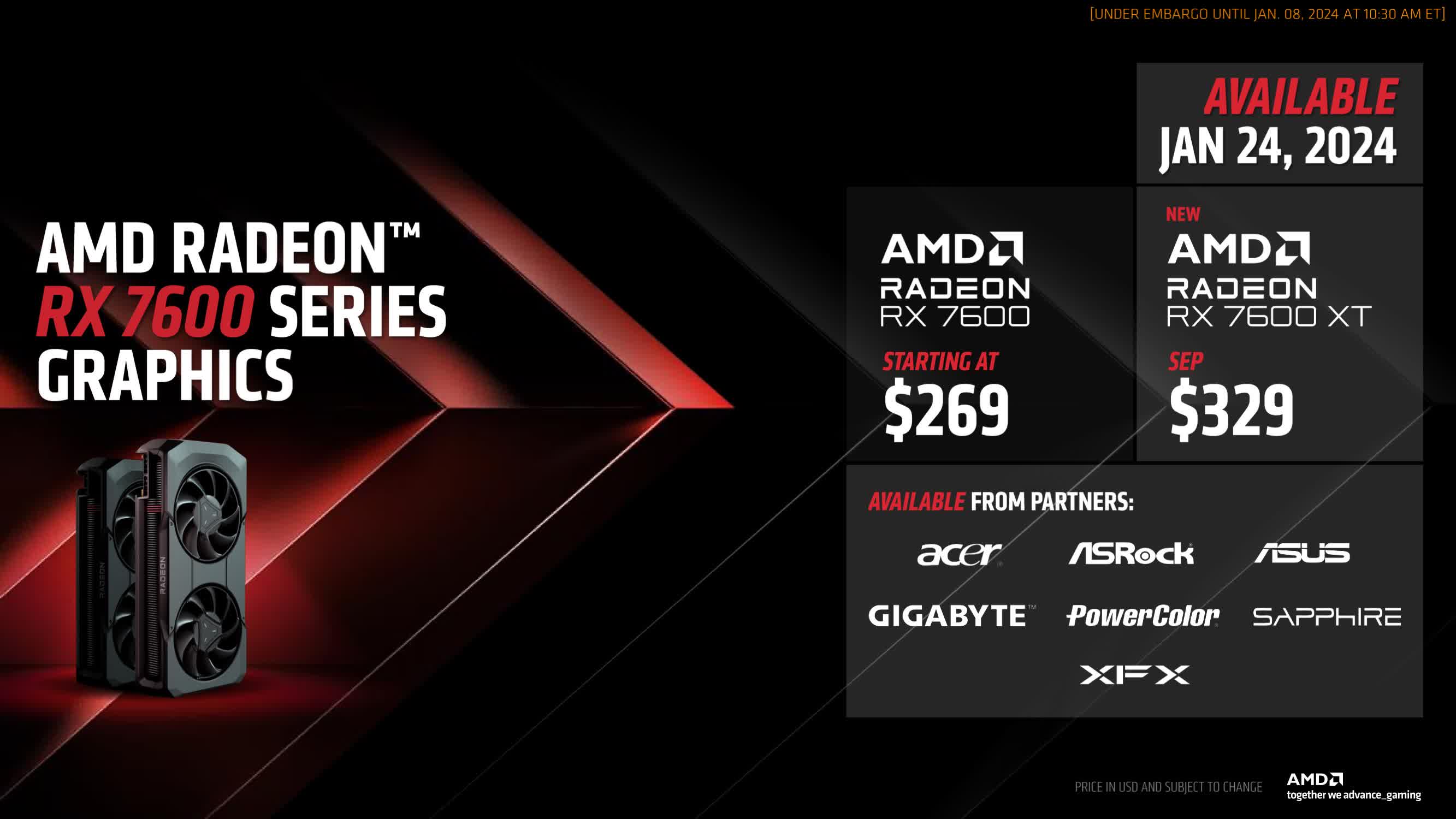

The price of the Radeon 7600 XT doesn't seem exceptional, with the MSRP $60 higher than the RX 7600 for a minor clock increase and double the VRAM. Real-world pricing could be as much as $80 higher, given that we have seen the 7600 fall to $250 at times, and the 7600 is not an exceptionally priced product to begin with.
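To put the premium in relative terms, here is a rough sketch using only the prices quoted above. The $270 figure for the RX 7600 MSRP is simply the $330 XT price minus the stated $60 gap, so treat it as derived from this article rather than as an official number; the variable names are ours.

```python
XT_MSRP = 330          # RX 7600 XT price quoted in the article
MSRP_GAP = 60          # stated MSRP difference versus the RX 7600
STREET_PRICE_7600 = 250  # lowest RX 7600 street price mentioned

base_msrp = XT_MSRP - MSRP_GAP            # implied RX 7600 MSRP (assumption)
street_premium = XT_MSRP - STREET_PRICE_7600

msrp_premium_pct = MSRP_GAP / base_msrp * 100
street_premium_pct = street_premium / STREET_PRICE_7600 * 100

print(f"MSRP premium: ${MSRP_GAP} ({msrp_premium_pct:.0f}%)")
print(f"Street premium: ${street_premium} ({street_premium_pct:.0f}%)")
```

Seen this way, the XT costs roughly a fifth more at MSRP and about a third more against the best street pricing we've seen on the 7600.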

We give some credit to AMD for making their most affordable 16GB graphics card yet and offering more VRAM in the sub-$350 price tier, but it feels a bit expensive relative to the RX 7600. It's priced similarly to the still-available RX 6700 XT with 12GB of VRAM, which can be found for $320. The 6700 XT is likely faster, so it will be interesting to see where it ranks in Steve's benchmarks.

Our initial feeling is that AMD may have missed an opportunity to exert significant pressure on the RTX 4060. The Radeon RX 7600 (non-XT) and GeForce RTX 4060 offer similar performance, so if AMD could offer a slight performance advantage as well as double the VRAM at the same $300 price as the RTX 4060, that would be quite compelling. However, at $330, we have yet to be convinced.

https://www.techspot.com/news/101435-amd-latest-radeon-rx-7600-xt-double-vram.html