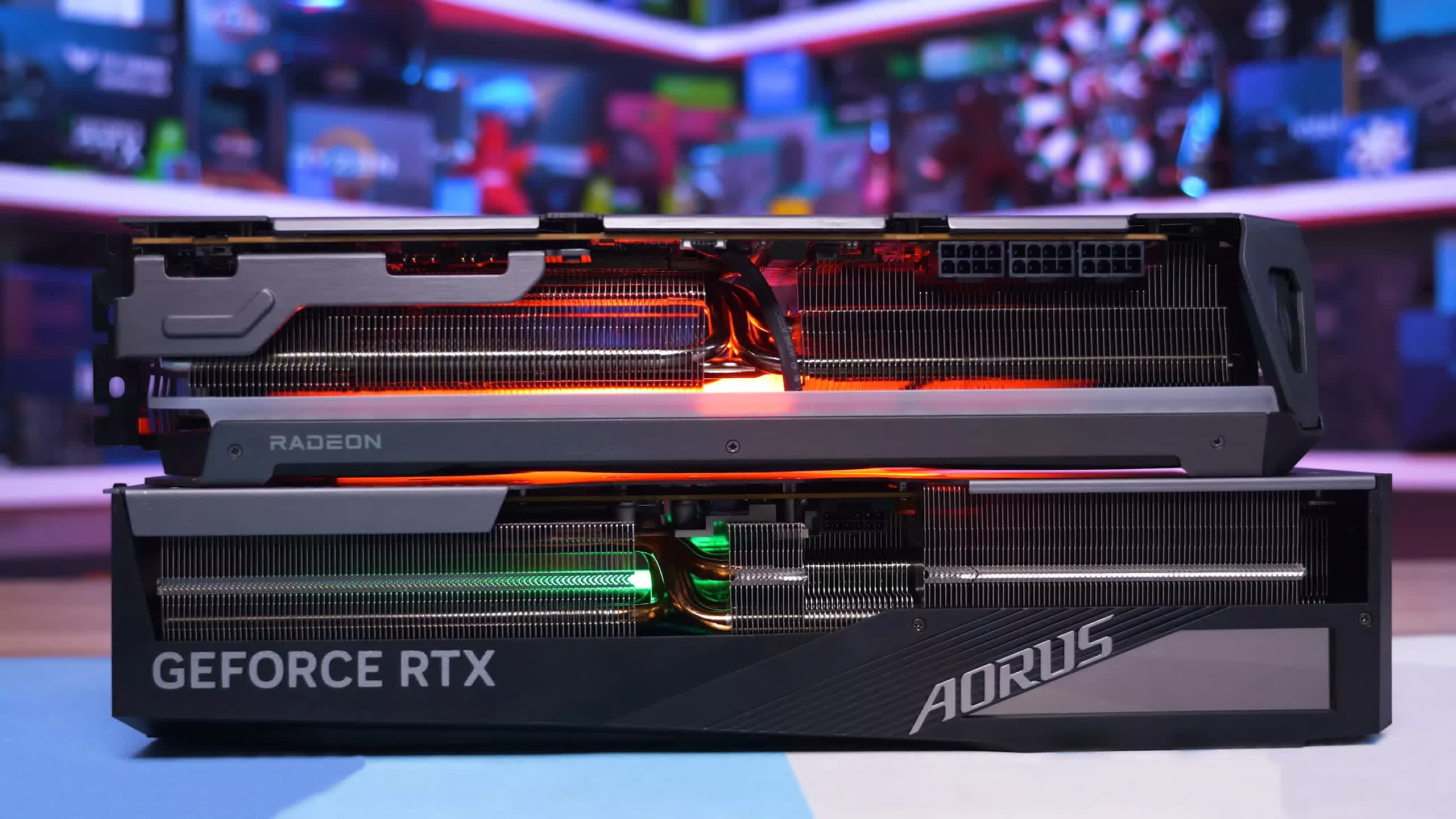

New year, new GPU comparison. Today we're taking an updated look at the current generation flagship, the battle between the Radeon RX 7900 XTX and GeForce RTX 4090. Of course, we know the GeForce GPU is faster, but it also costs $2,000 whereas you can pick up a 7900 XTX for just under $1,000, or about half the price. That's a massive difference, so is the GeForce really worth such a significant premium?

For all the AMD fans screaming at their monitors, we know the 7900 XTX more directly competes with the RTX 4080... not the exorbitantly priced RTX 4090. We've already covered that matchup, testing the Radeon 7900 XTX and RTX 4080 in a range of games, with extensive rasterization, ray tracing, and even DLSS vs FSR upscaling benchmarks. So, you can check out that review if that's the comparison you're interested in.

Back to this comparison, it actually came about because of a recent reader question, where someone asked if we would purchase an RTX 4080 or a hypothetical "GTX 4090," a GPU with the rasterization performance of the RTX 4090 but priced like the RTX 4080, as it lacked tensor cores and therefore couldn't take advantage of RTX features.

We were divided between the RTX 4080 and the GTX 4090, the latter of which would be a better GPU for someone like me who mostly plays competitive multiplayer shooters. Surprisingly, a significant number of readers claimed we already have a GTX 4090, also known as the Radeon RX 7900 XTX.

But last we checked, the Radeon 7900 XTX was often quite a bit slower than the RTX 4090 in rasterization performance, so has something changed since our last review? It seems unlikely, but several new and visually stunning games were released late last year, so an updated comparison seemed appropriate. And here we are.

For testing, we're using our Ryzen 7 7800X3D test system with 32 GB of DDR5-6000 CL30 memory. The display drivers used were Game Ready 546.33 and Adrenalin Edition 23.12.1. It's time we delve into the data, so let's do it…

Benchmarks

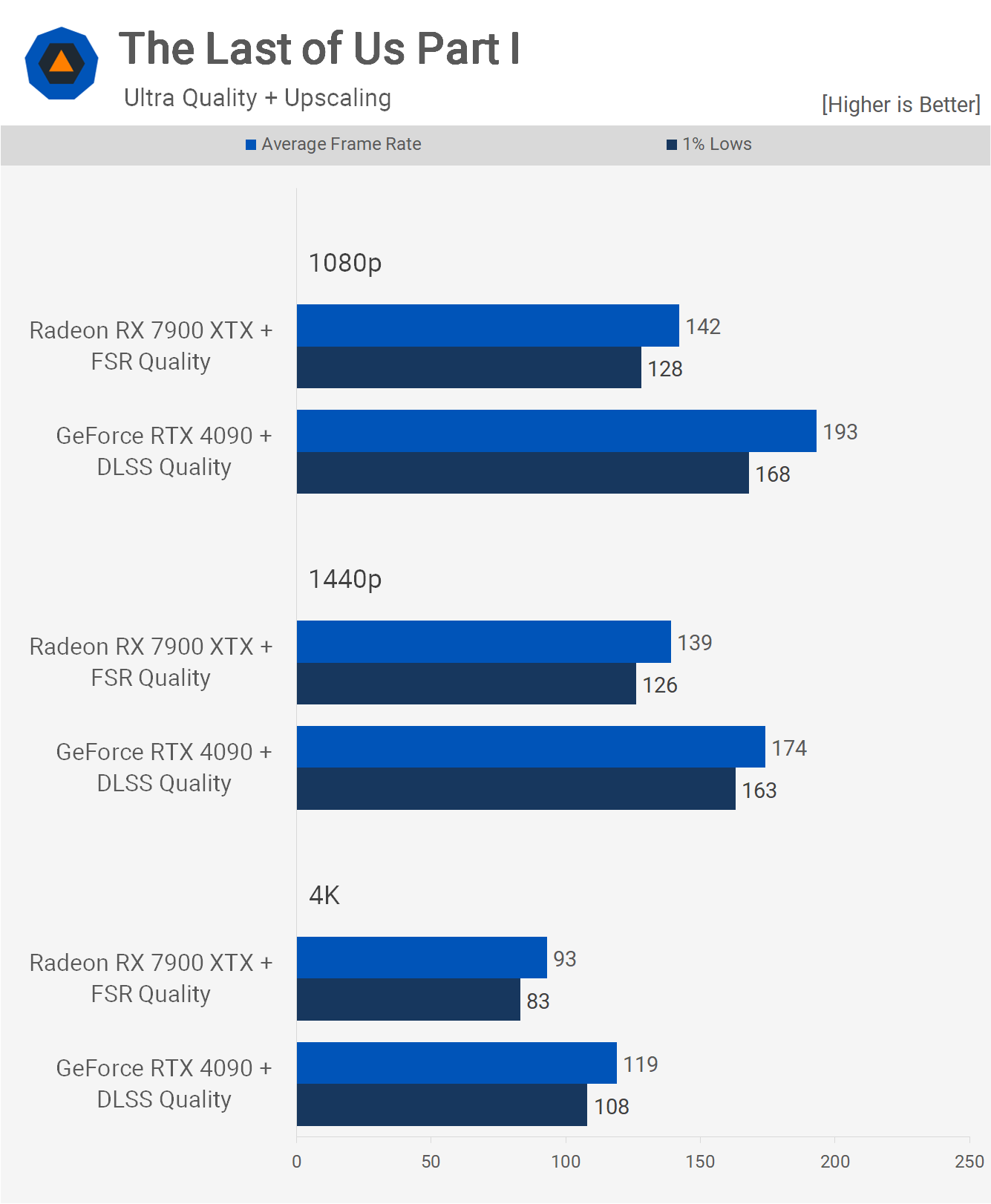

First up, we have results for the most up-to-date version of The Last of Us Part I, and here the 7900 XTX was 23% slower at 1080p, 20% slower at 1440p, and 21% slower at 4K. So, good results overall for the XTX, particularly given that at MSRP it's almost 40% cheaper, and at 4K, we're looking at over 60 fps without having to enable upscaling.

Speaking of which, when using the FSR and DLSS quality modes, we see significant frame rate increases for both GPUs in this title. Here the XTX was 26% slower at 1080p, 20% slower at 1440p, and 22% slower at 4K.

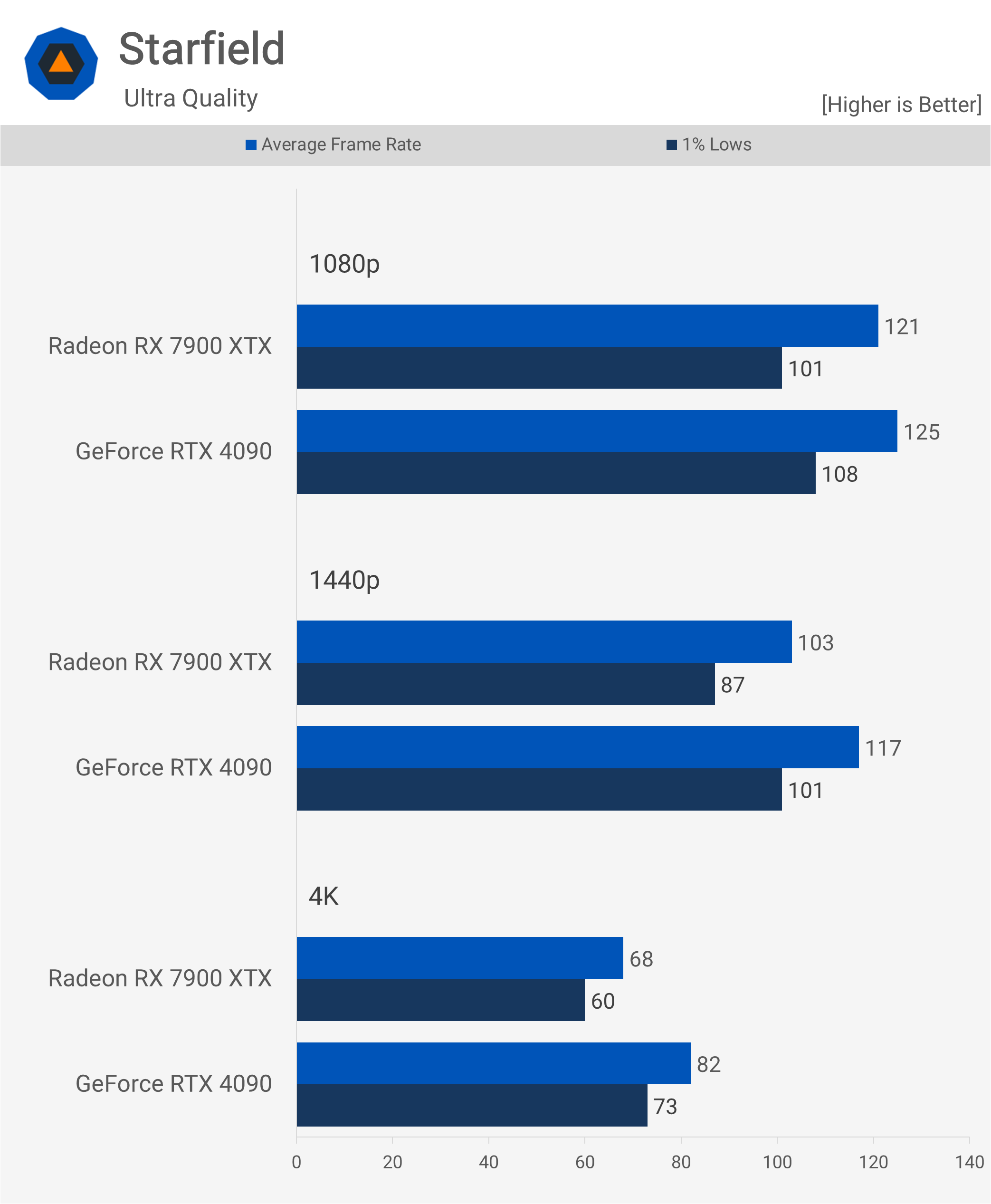

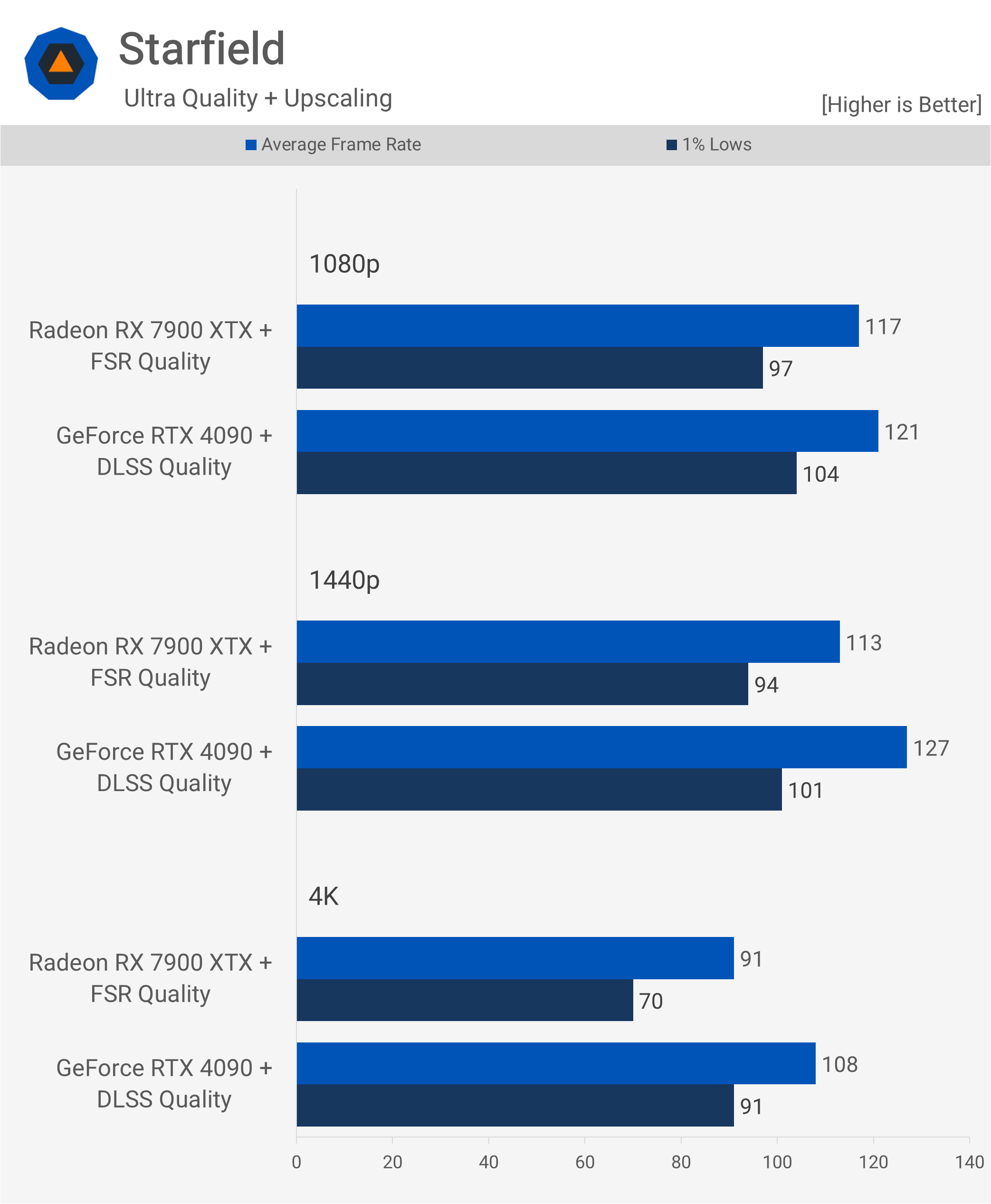

Next, we have Starfield, and again this data is based on the most up-to-date version of the game at the time of publication. At 1080p, the 7900 XTX is just 3% slower than the RTX 4090, then 12% slower at 1440p, and 17% slower at 4K, making for another set of positive results for the much cheaper Radeon GPU.

Even if we enable upscaling, the margins remain much the same, with the XTX being 3% slower at 1080p, 11% slower at 1440p, and 16% slower at 4K.

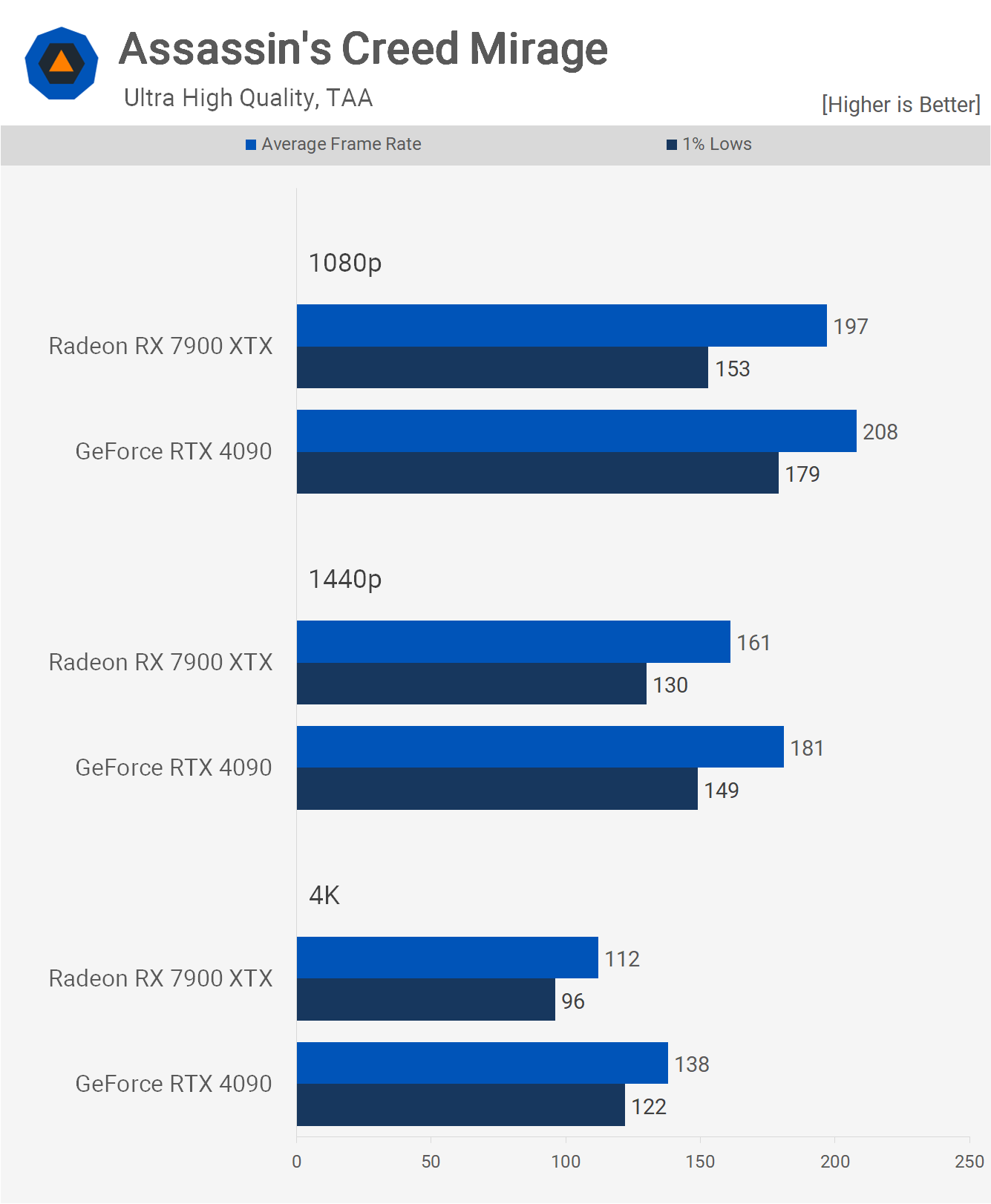

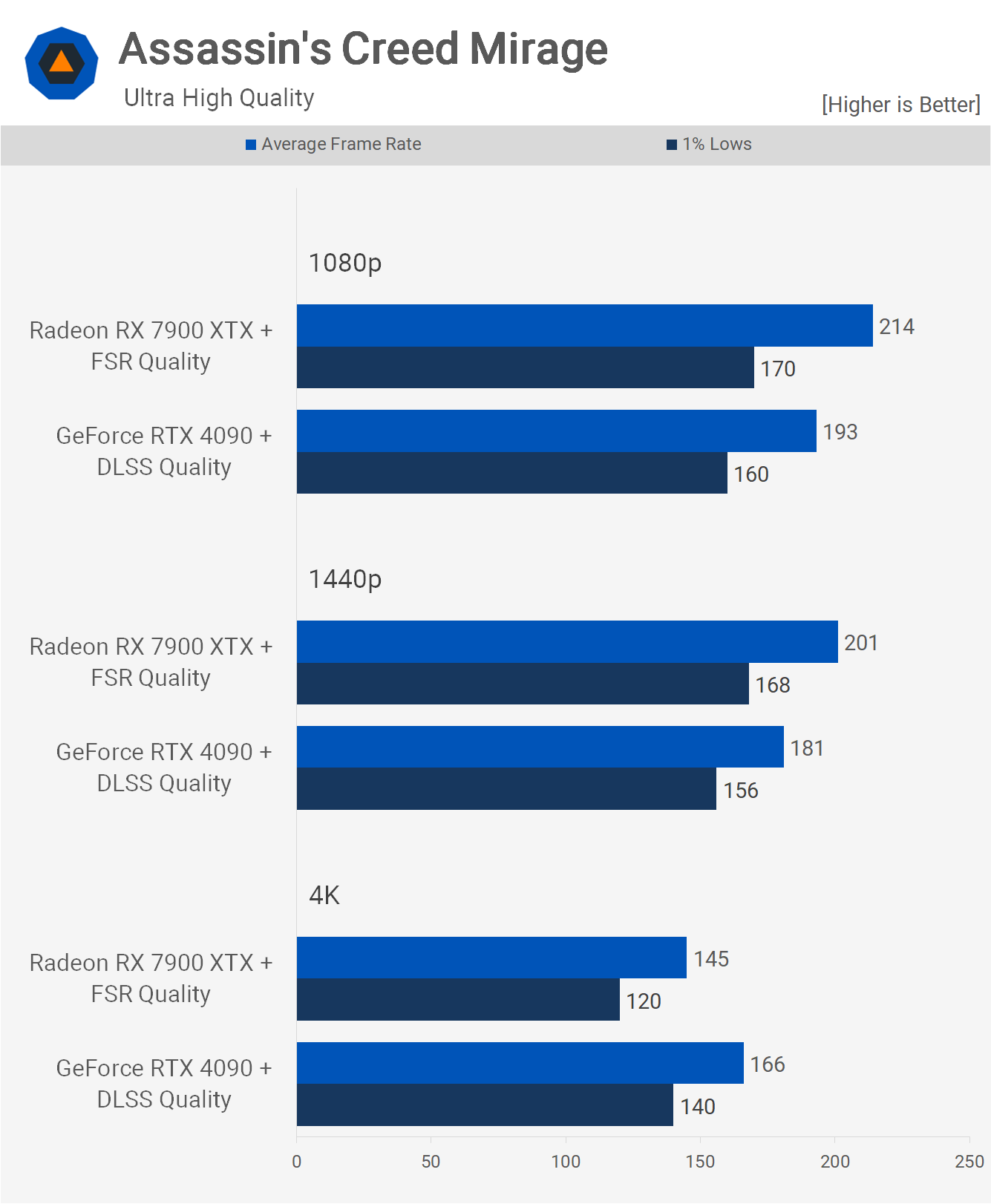

Performance in Assassin's Creed Mirage is very competitive; here the 7900 XTX was just 5% slower at 1080p, 11% slower at 1440p, and 19% slower at 4K. We're also looking at well over 100 fps at 4K, using the highest quality preset.

Now, with FSR and DLSS enabled, the 7900 XTX can beat the RTX 4090 at 1080p and 1440p, offering 11% more frames at 1440p. That said, the Radeon GPU does fall behind at 4K, though only by a 13% margin.

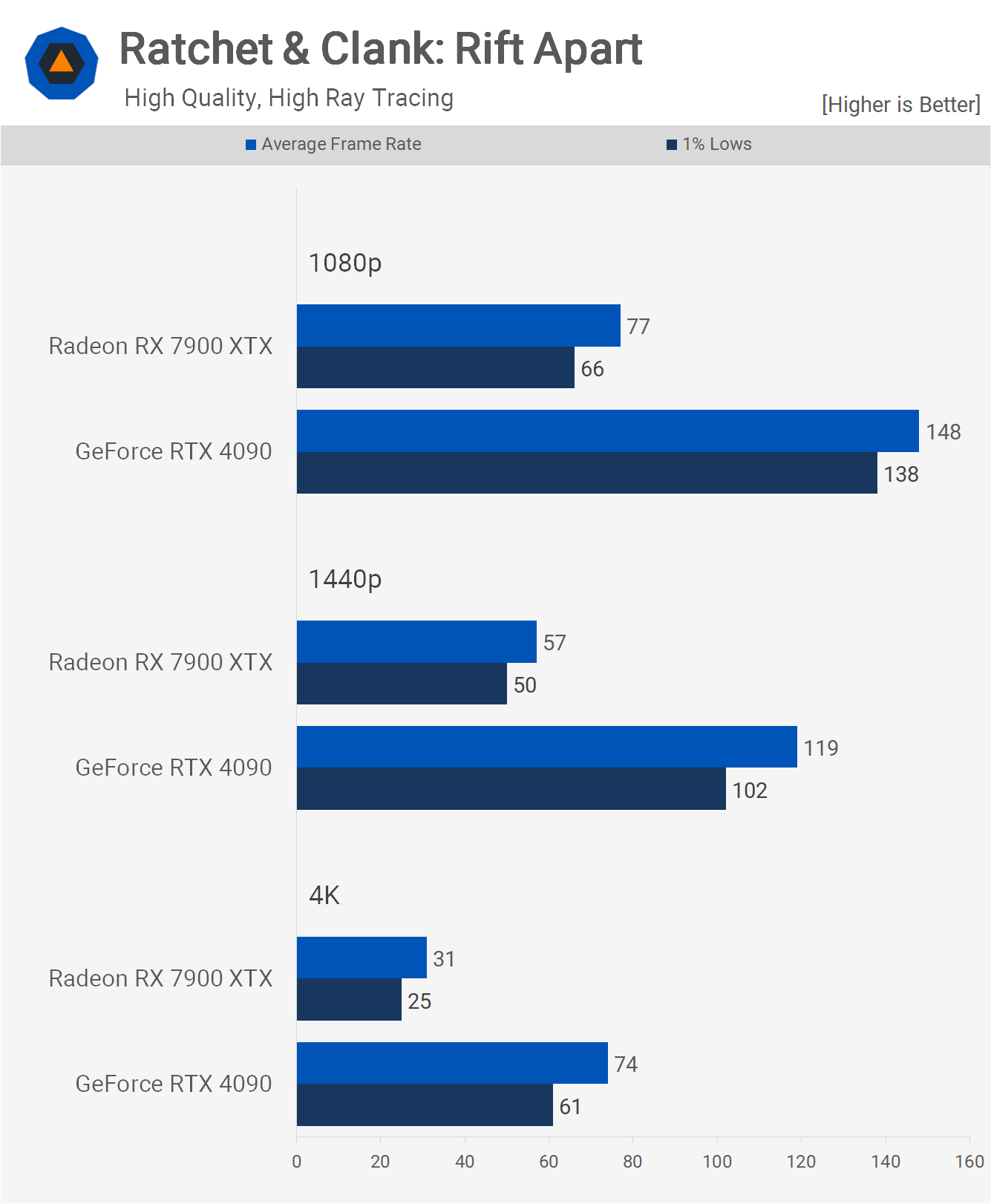

Moving on to Ratchet & Clank: Rift Apart, we see that neither GPU has any issues with this title, though, as expected, the RTX 4090 is faster. The XTX trailed by a 16% margin at 1080p, 20% at 1440p, and then just 8% at 4K.

However, what significantly impacts the Radeon GPU in this title is ray tracing. Switching it on using high settings, performance falls off significantly. We're now looking at 77 fps at 1080p, making it almost 50% slower than the 4090. Actually, at 1440p, it's 52% slower and an incredible 58% slower at 4K, or another way of looking at it, the RTX 4090 is nearly 140% faster at 4K.
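The "X% slower" and "Y% faster" figures describe the same gap from opposite directions, which is why they are not the same number. As a minimal sketch of that conversion (the function name `slower_to_faster` is ours, purely for illustration), using the percentages quoted above:

```python
def slower_to_faster(pct_slower: float) -> float:
    """Given that card A is `pct_slower` percent slower than card B,
    return how many percent faster card B is than card A."""
    # A's frame rate expressed as a fraction of B's frame rate.
    ratio = 1.0 - pct_slower / 100.0
    # B relative to A is the inverse of that fraction.
    return (1.0 / ratio - 1.0) * 100.0


# Margins quoted in the text for Ratchet & Clank with ray tracing enabled.
for pct in (52, 58):
    print(f"{pct}% slower -> {slower_to_faster(pct):.0f}% faster")
```

A 58% deficit therefore works out to roughly a 138% advantage for the other card, which matches the "nearly 140% faster" figure.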

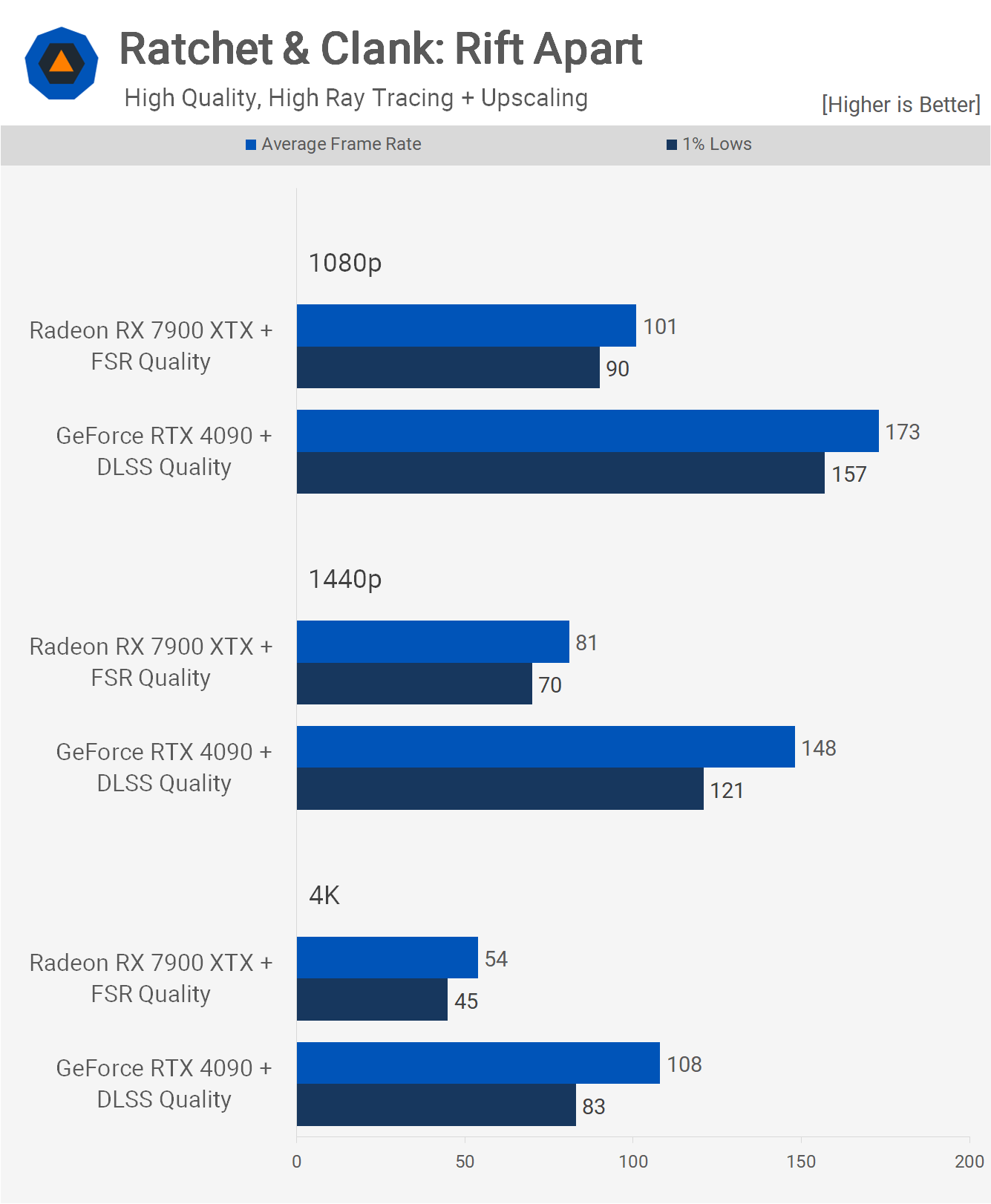

Enabling upscaling does help the 7900 XTX relative to the 4090, as now it's 42% slower at 1080p, 45% slower at 1440p, and 50% slower at 4K.

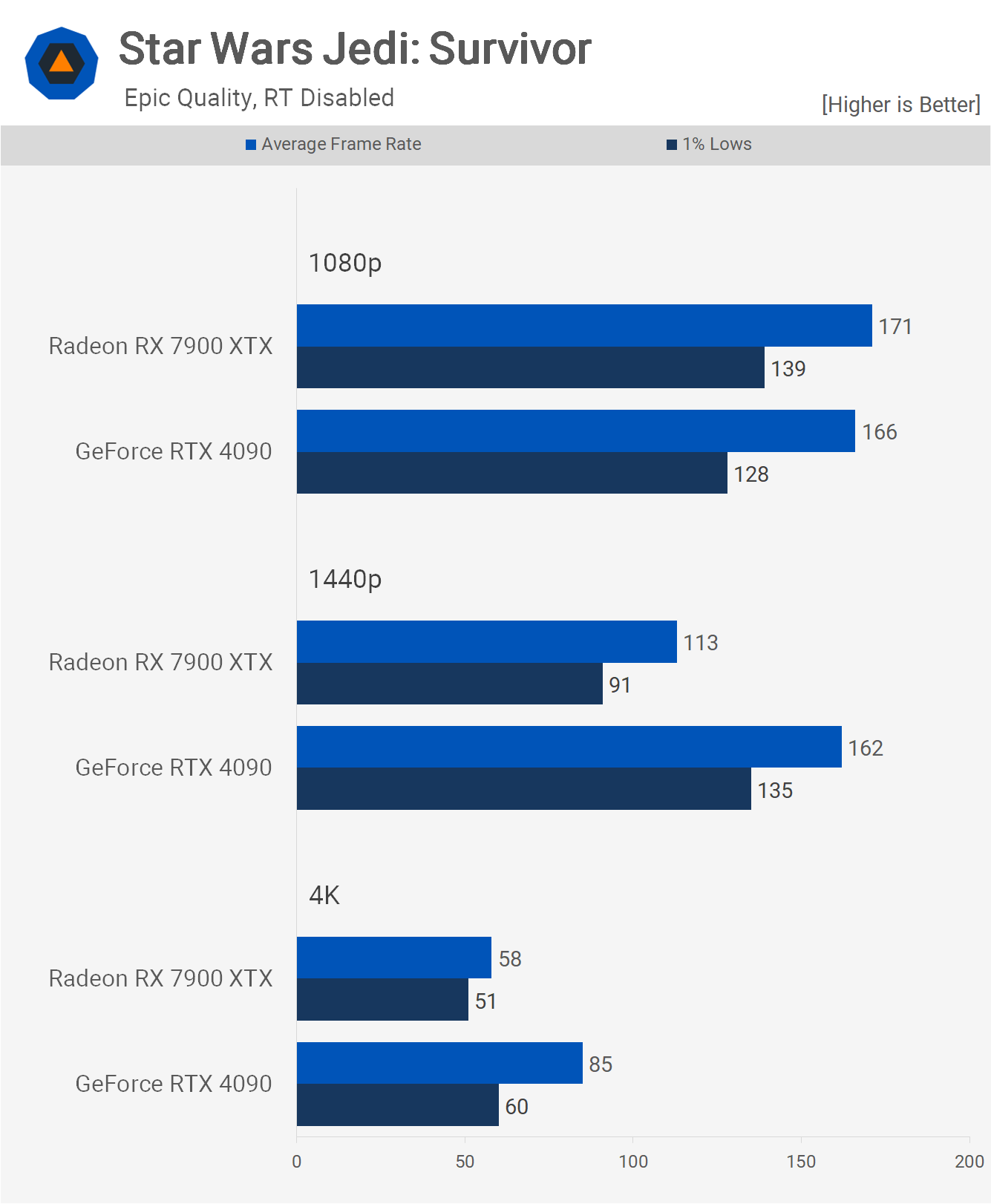

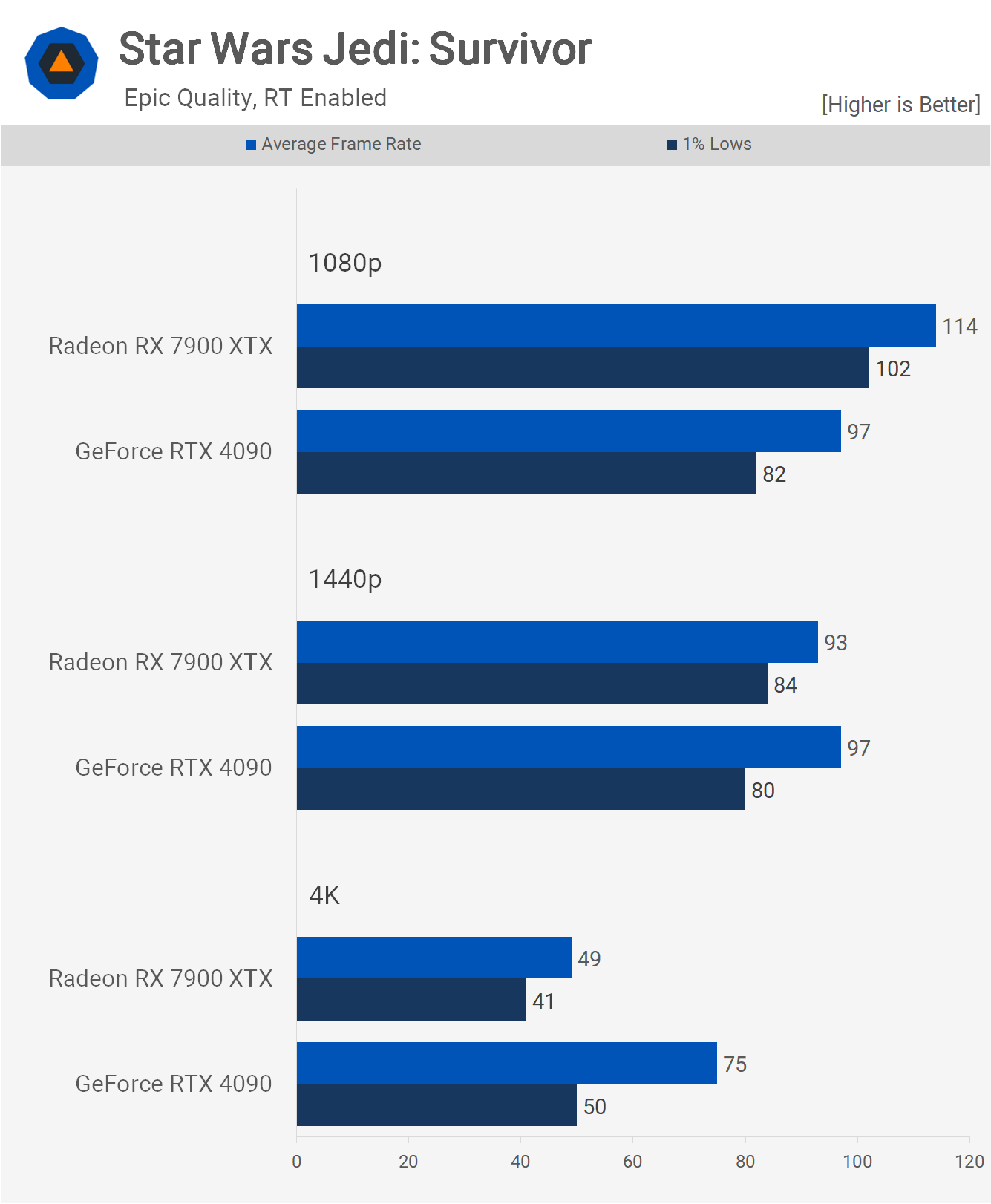

Next up, we have Star Wars Jedi: Survivor, and here we find some heavily CPU-limited results at 1080p, though frame rates are well above 150 fps. Still, the Radeon GPU manages to take the lead due to requiring less CPU processing power, nudging ahead by a mere 3% margin.

But once we hit the 1440p resolution, the 7900 XTX configuration becomes heavily GPU-limited, and now it's 30% slower and then 32% slower at 4K.

Now with ray tracing enabled, the CPU load increases, and as a result, the 7900 XTX offers a rather substantial 18% performance increase over the RTX 4090 at 1080p, as the added CPU overhead from the GeForce GPU sees it become CPU-limited much earlier. Because of this, even at 1440p, the margins are very similar, and it's not until we hit the 4K resolution that the results are GPU-limited, with the Radeon GPU falling behind by a 35% margin.

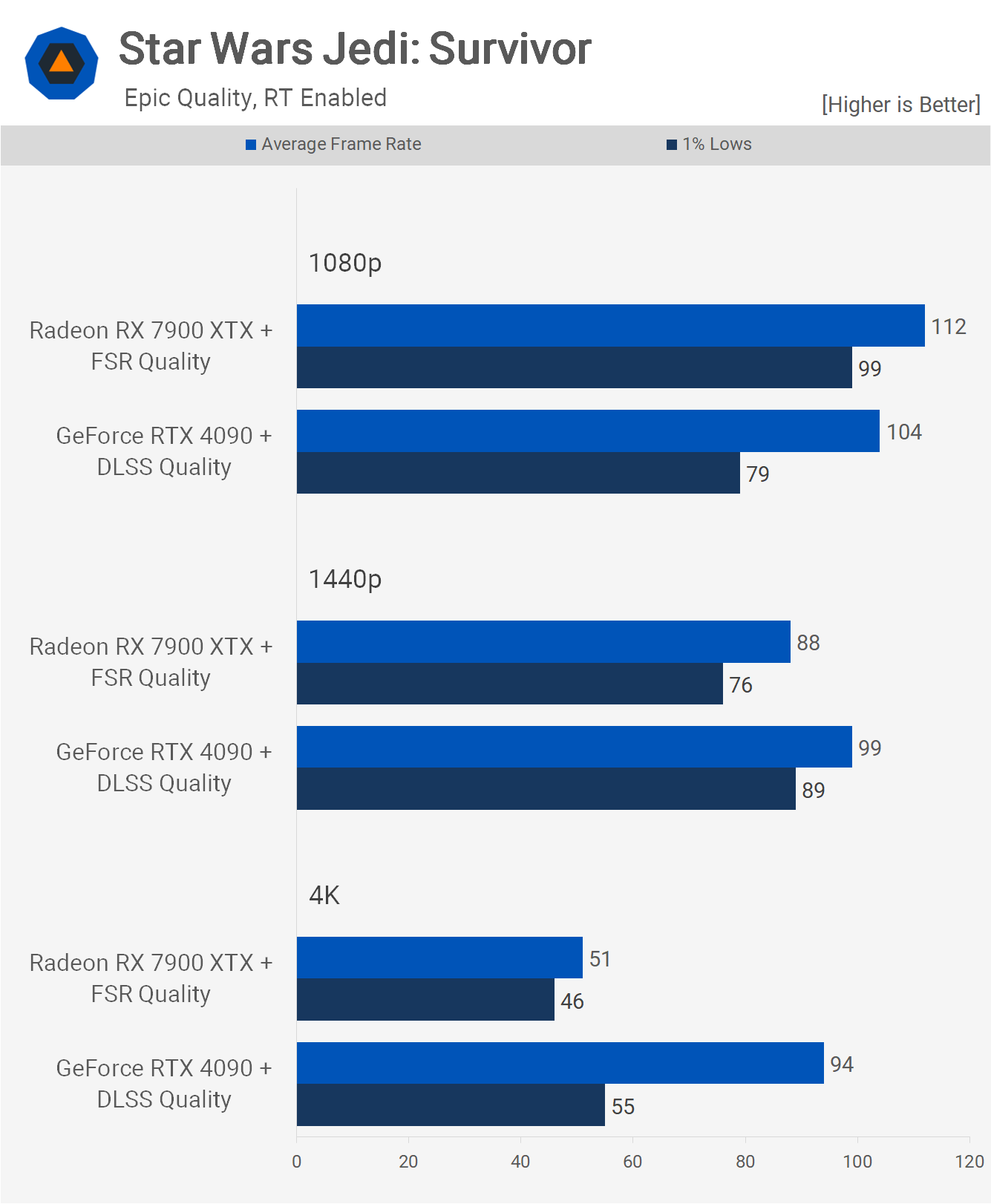

Enabling FSR and DLSS doesn't do much in this title as we are CPU-limited for the most part at 1080p and 1440p, and in fact, the overhead of these upscaling technologies actually reduces performance in some instances. But at 4K, where we are GPU-limited, the 7900 XTX only saw a 4% increase, whereas DLSS offered a much more substantial 25% boost for the RTX 4090.

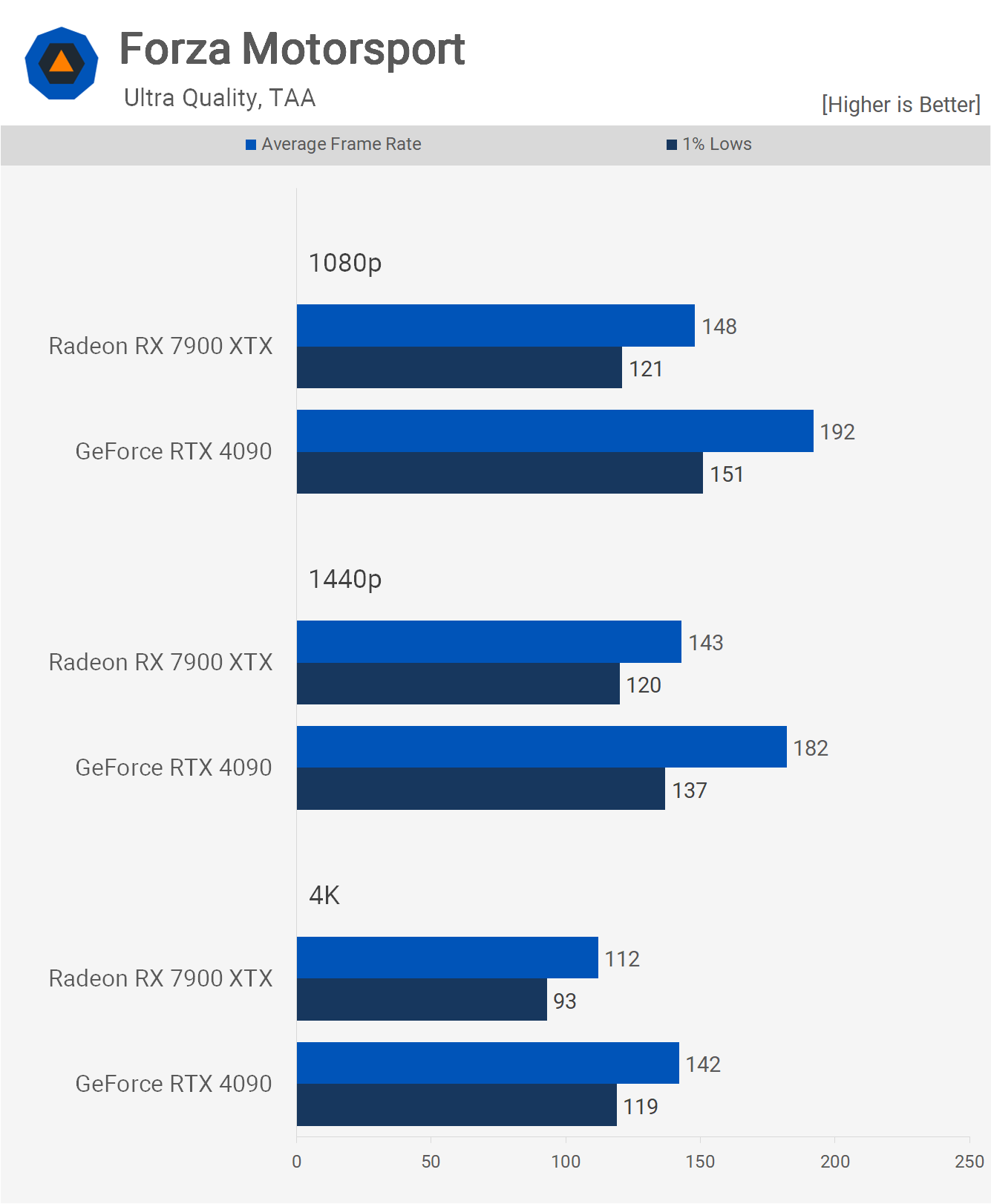

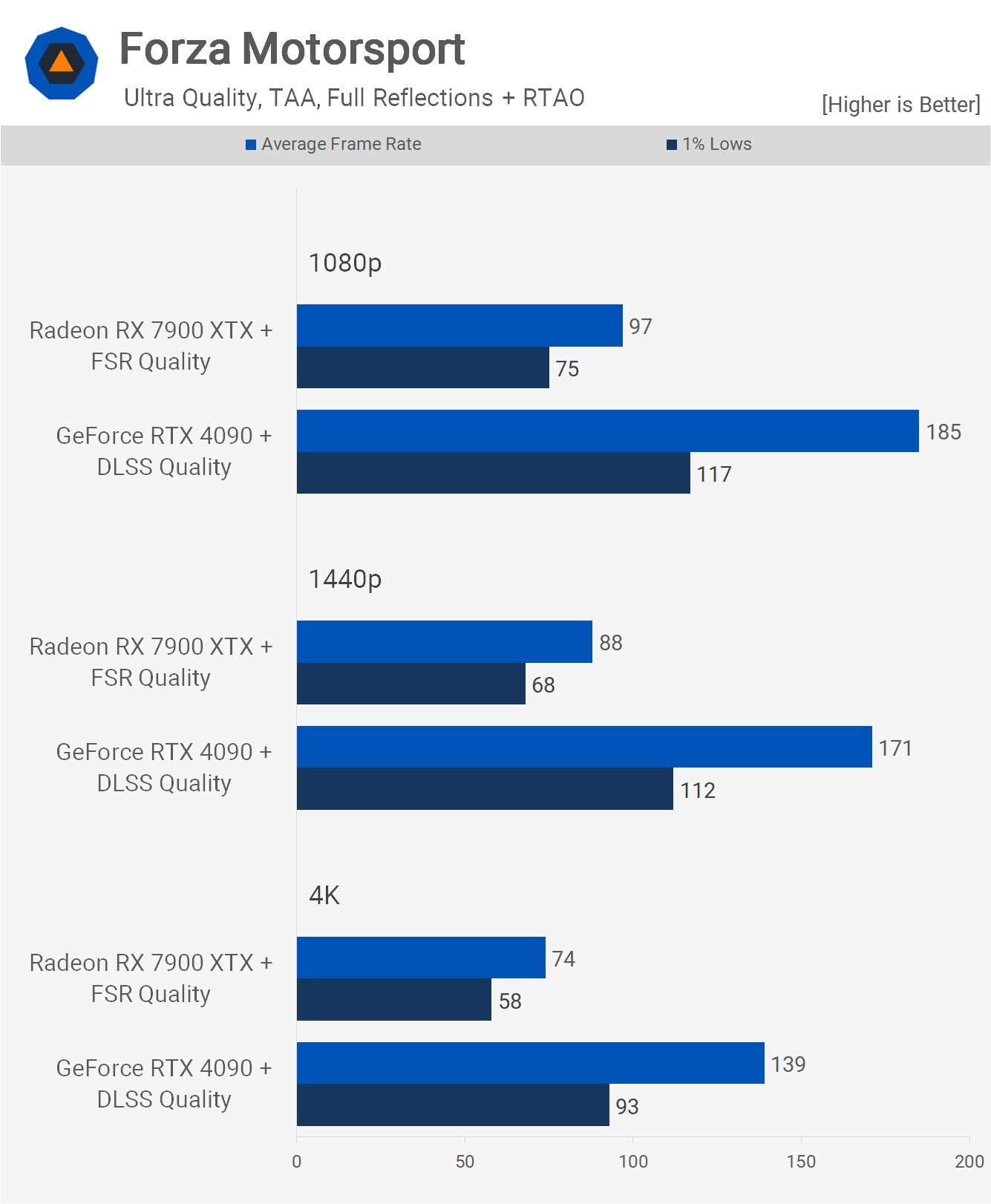

Forza Motorsport plays well using either GPU with the ultra-quality preset, but even so, the RTX 4090 is faster, resulting in the 7900 XTX being 23% slower at 1080p and then 21% slower at 1440p and 4K.

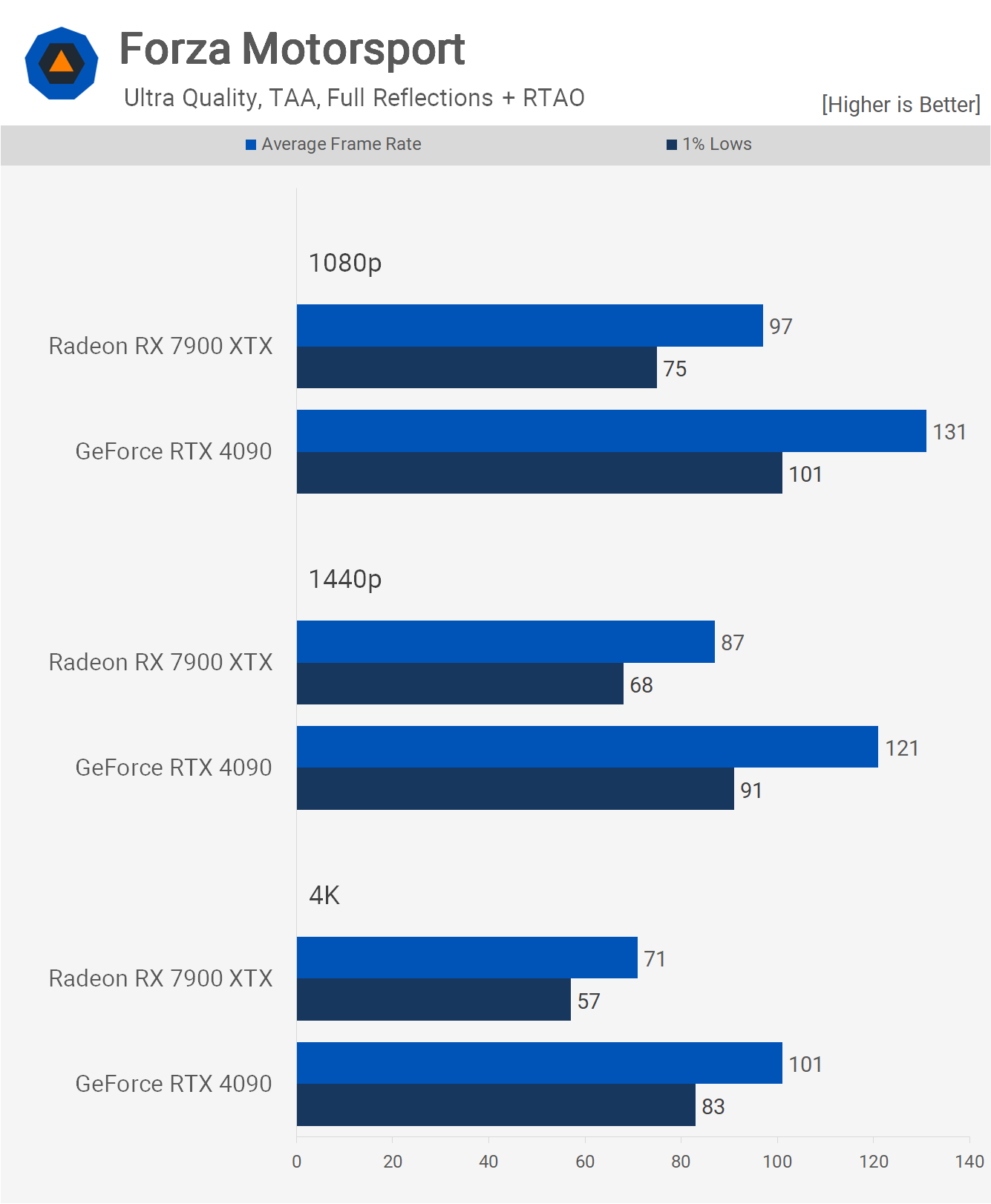

Enabling full RT effects further favors the GeForce GPU, and now the 7900 XTX was 26% slower at 1080p, 28% slower at 1440p, and 30% slower at 4K.

Again, we have another title where FSR doesn't appear to be working correctly, as we see almost no change in performance for the 7900 XTX with it enabled. Apparently, DLSS had been broken in the past for this title, but that no longer appears to be the case, as we're seeing roughly a 40% increase across the board. So, a massive performance advantage here for the RTX 4090.

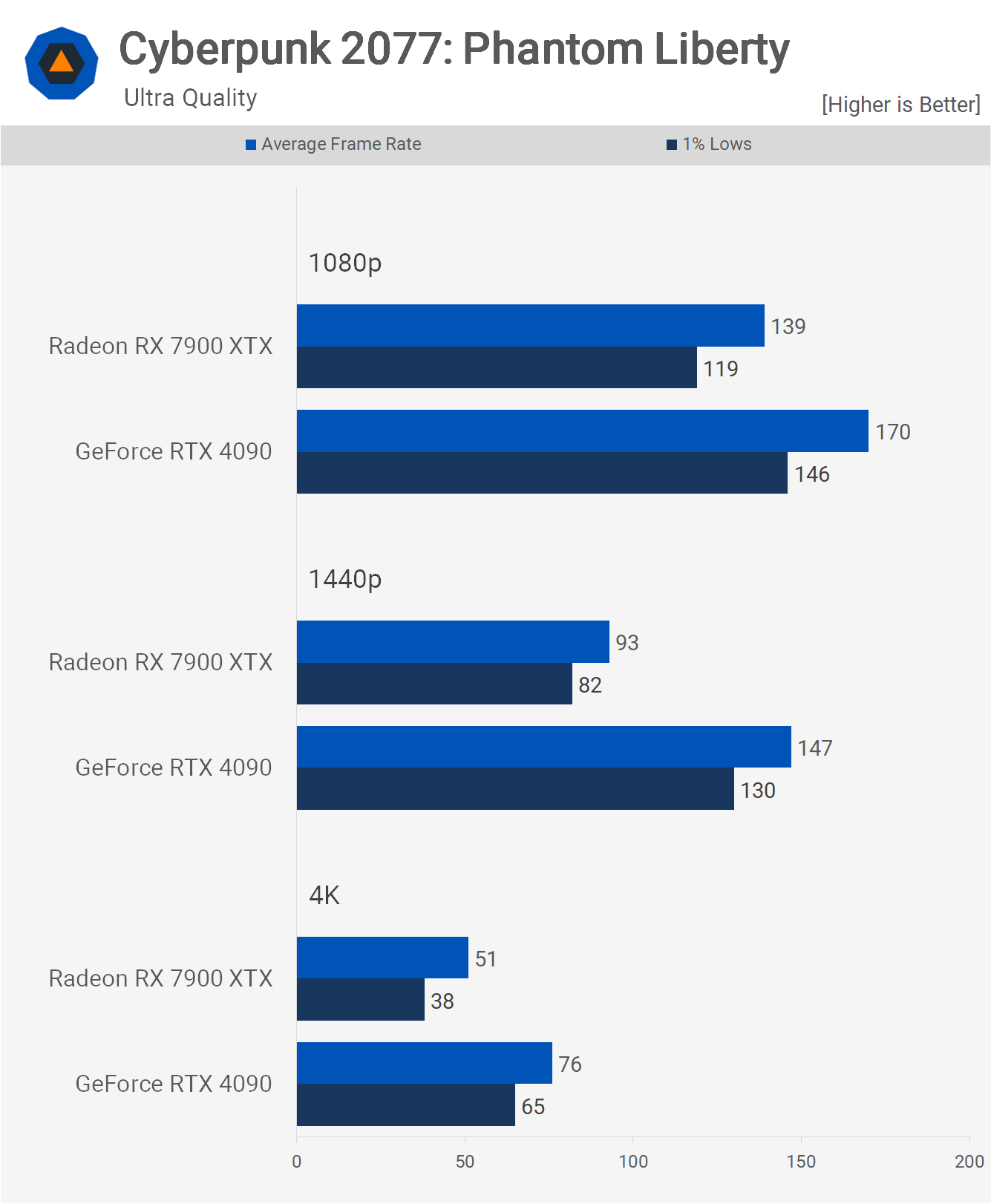

Next up, we have Cyberpunk 2077: Phantom Liberty, and without ray tracing enabled but using the Ultra preset, the 7900 XTX performs very well, rendering 139 fps at 1080p, making it just 18% slower than the RTX 4090.

However, at the more GPU-limited 1440p resolution, the margin increases to 37% and then 33% at 4K.

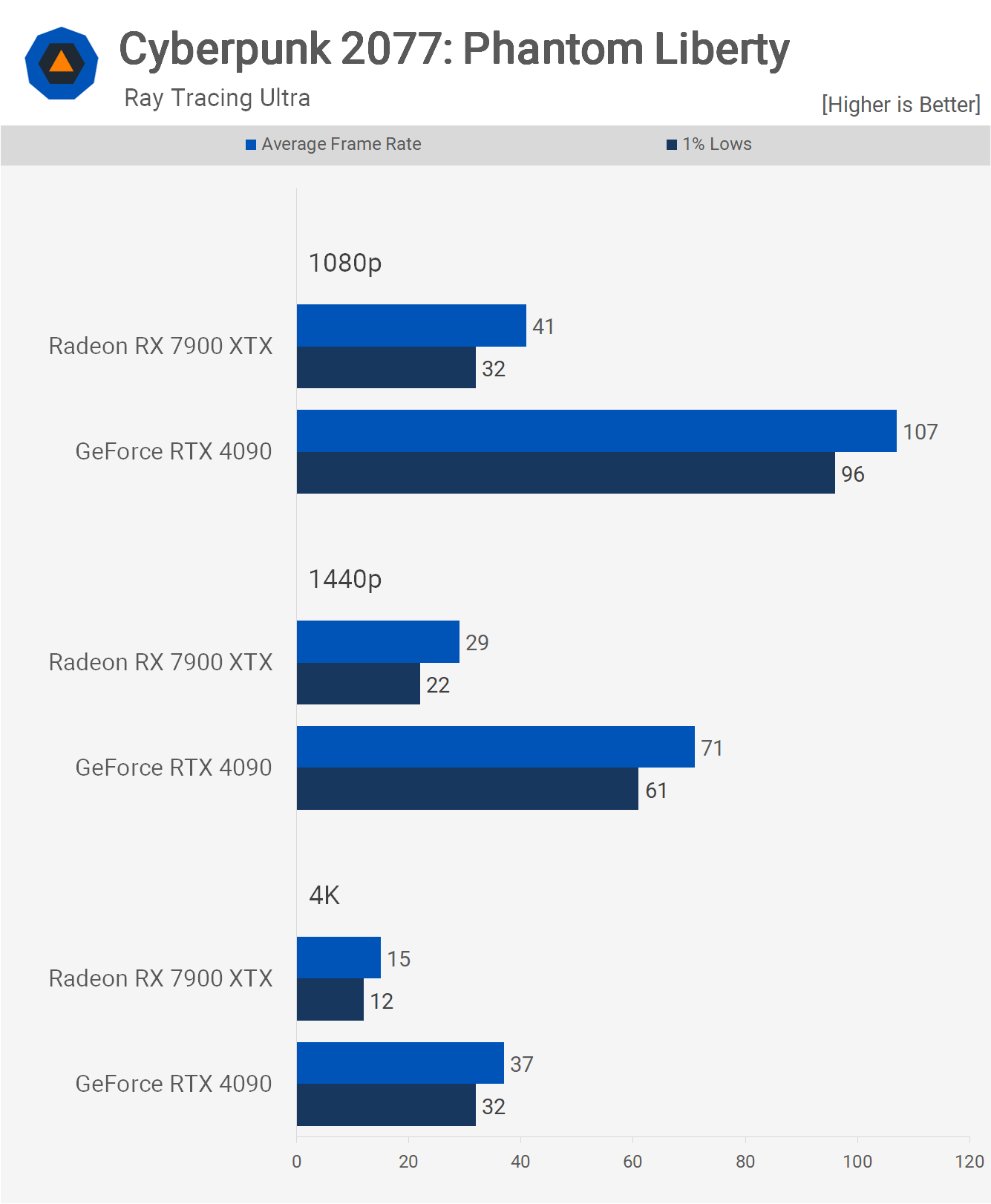

But that's not all. If we enable the Ray Tracing Ultra preset, the 7900 XTX struggles significantly, rendering just 41 fps at 1080p, making it 62% slower, or the 4090 161% faster. The margins remain similar at 1440p and 4K, so this is a disappointing set of results for AMD.

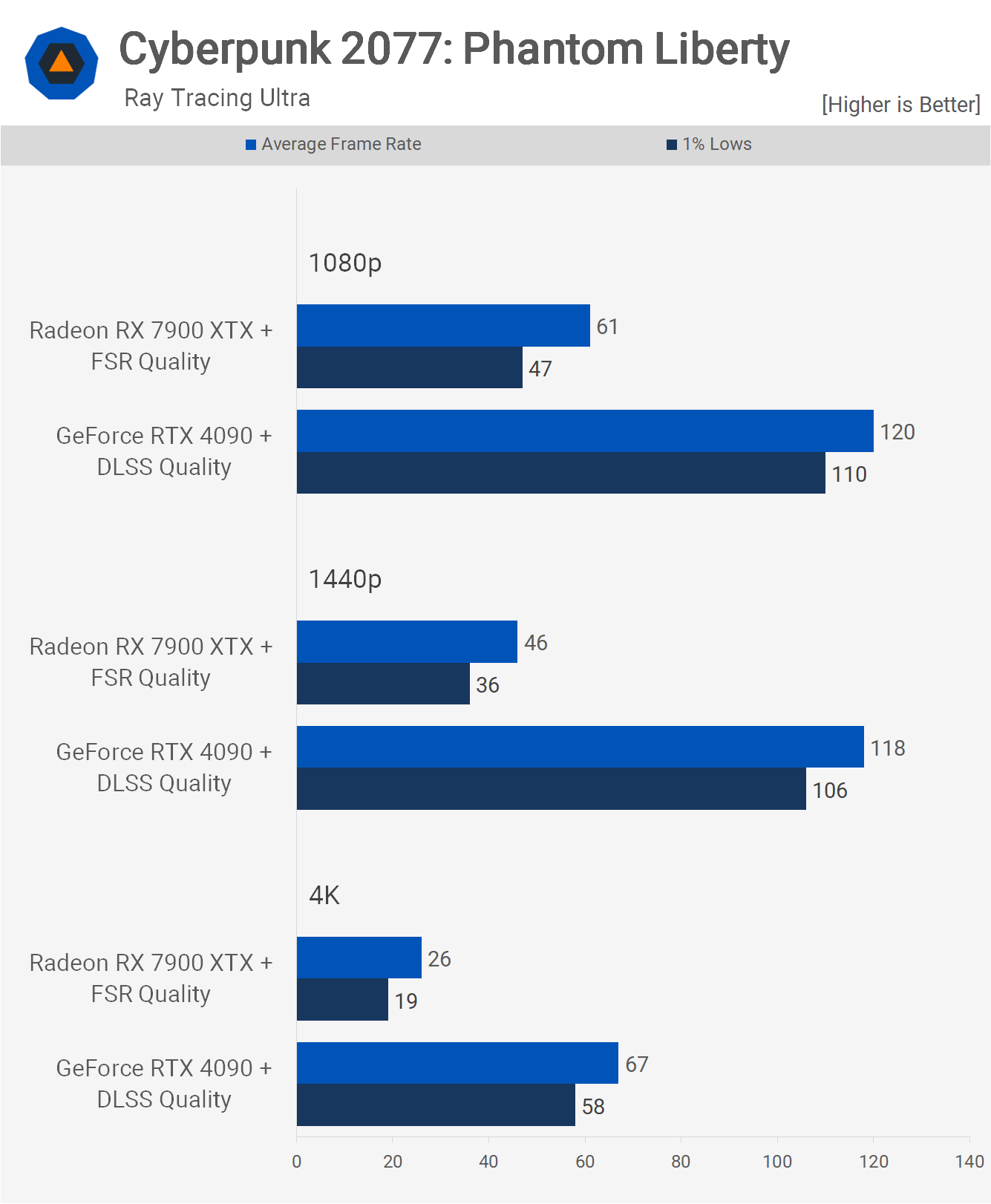

Now, enabling upscaling allows the 7900 XTX to average 61 fps at 1080p, but that's half the performance we're seeing from the RTX 4090, so again, disappointing results for AMD.

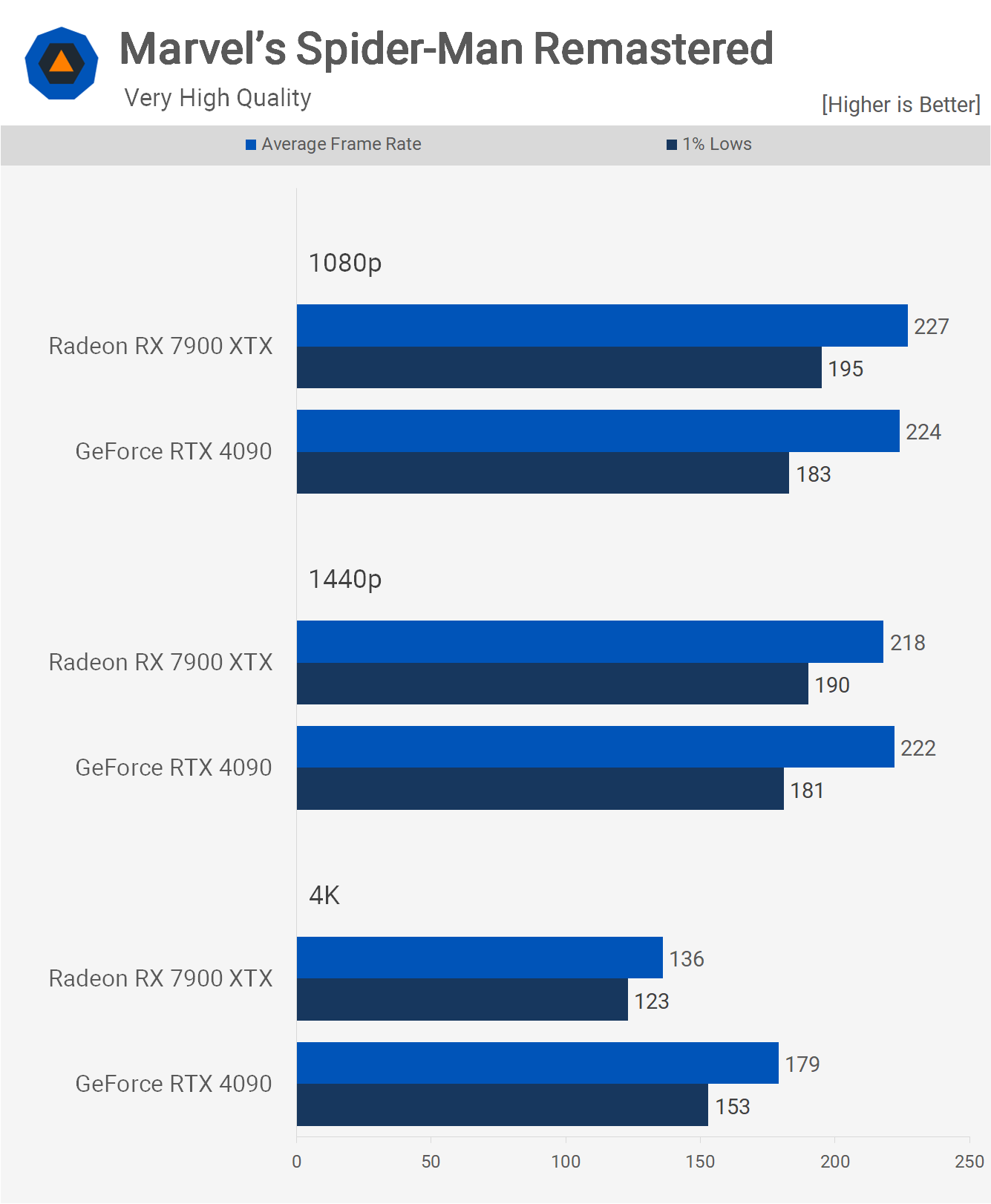

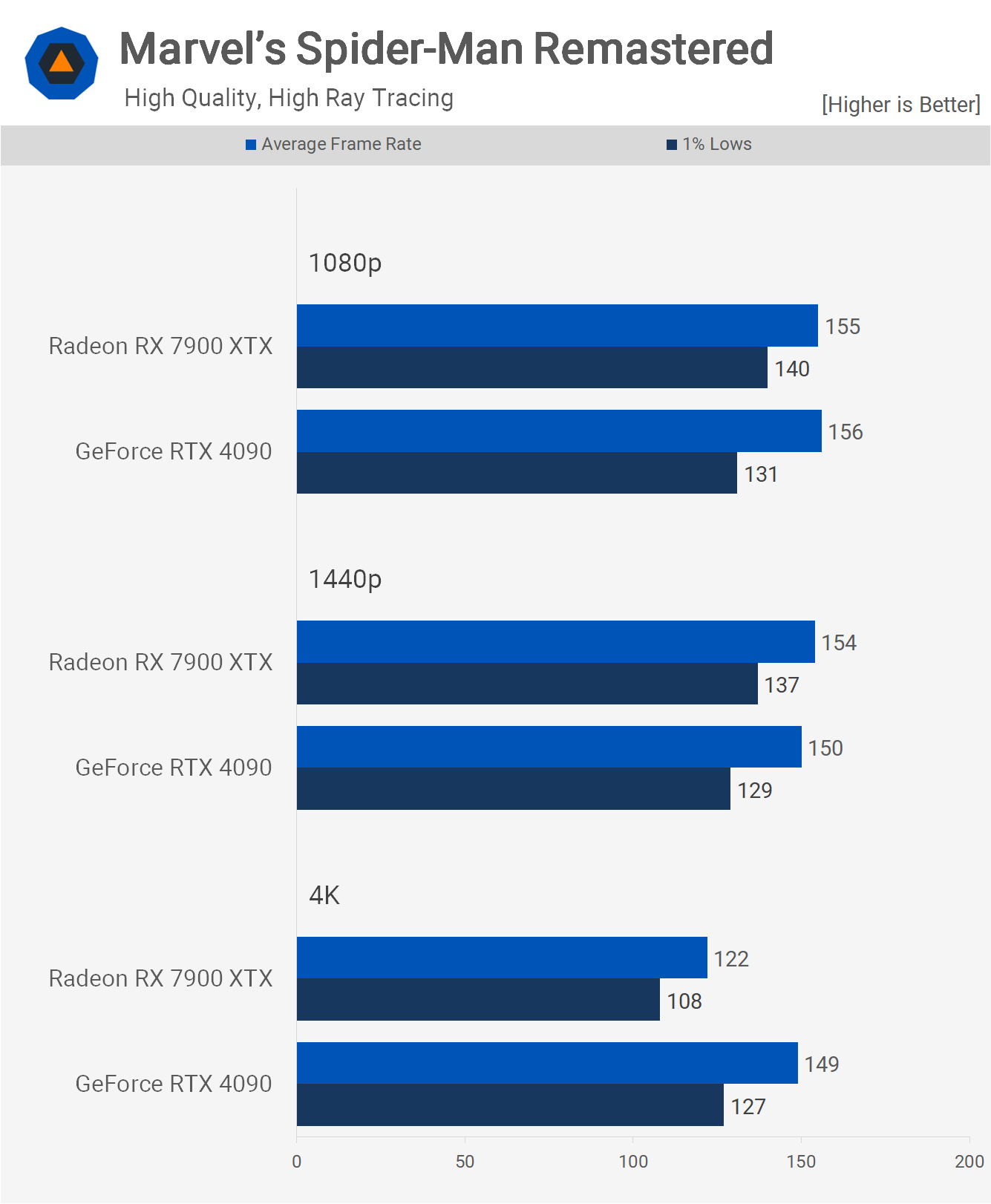

Marvel's Spider-Man Remastered is a well-optimized title, so much so that we end up CPU-limited even at 1440p. Therefore, we have to turn to 4K to see a difference in performance between these two GPUs, where the XTX falls behind by a 24% margin.

Enabling ray tracing doesn't further favor the GeForce GPU as the game becomes more CPU-limited, though the XTX was still 18% slower at 4K. But, had the RTX 4090 not been capped at around 150 fps, the margin could have been larger.

With the game CPU-limited, enabling upscaling doesn't do much, though it does help the 7900 XTX close the gap at 4K.

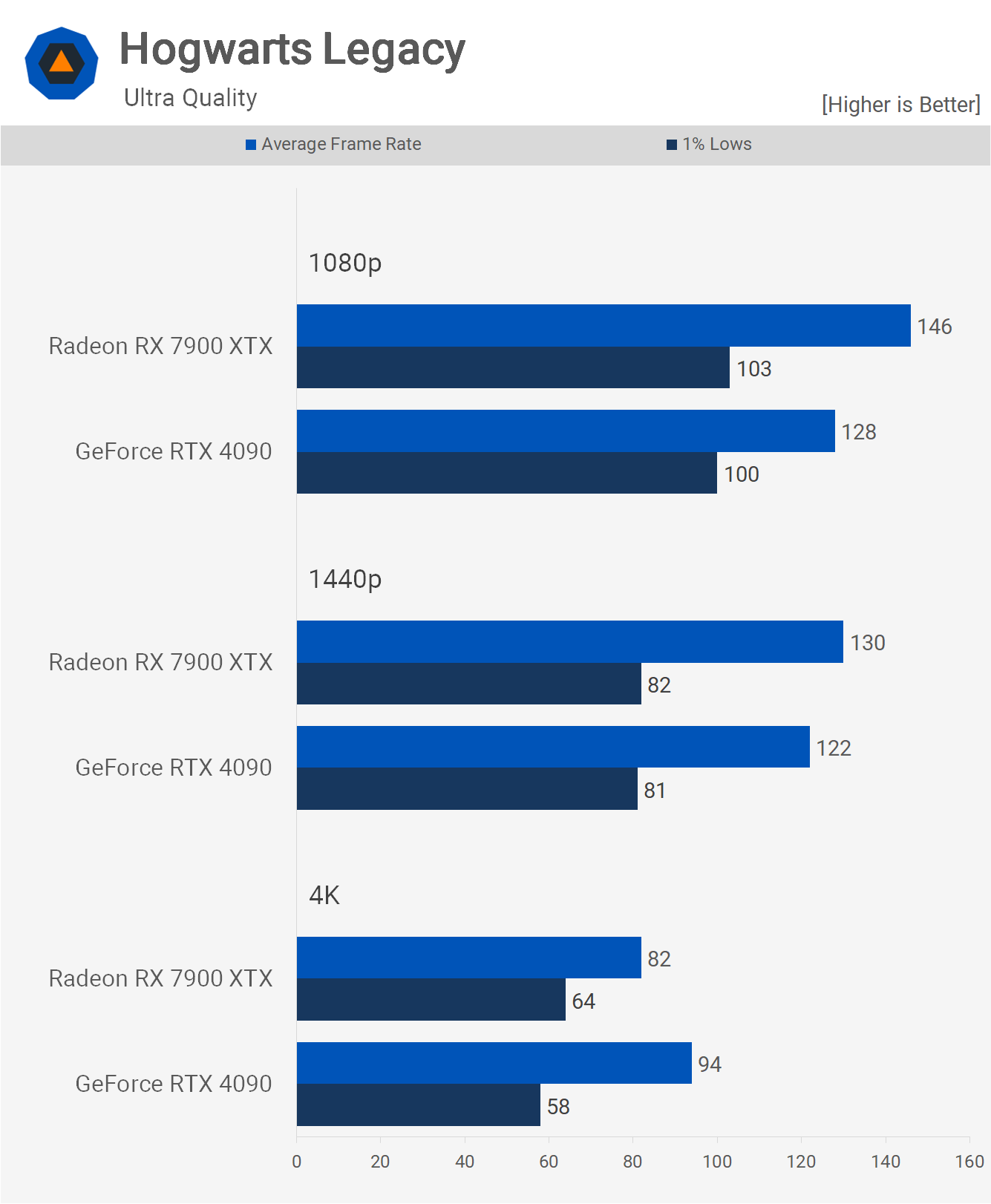

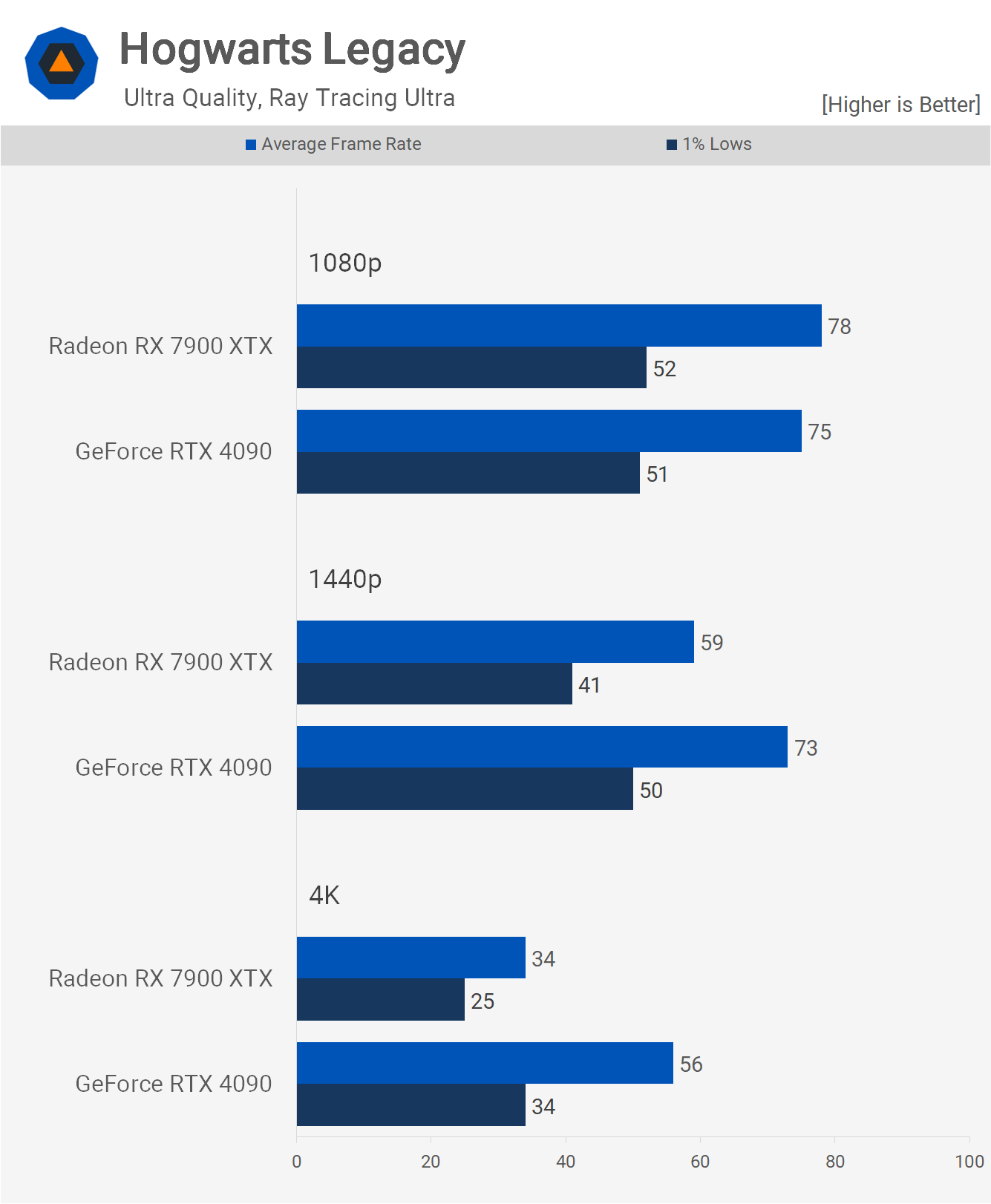

Hogwarts Legacy is another new game that's heavily CPU-limited, even when using the best gaming CPUs. As we've seen a few times now, when CPU-limited, the 7900 XTX can outperform the RTX 4090, and we're seeing that at 1080p and 1440p, while the 4K data is still competitive.

Enabling ray tracing reduces the 7900 XTX to similar levels of performance as the RTX 4090, as both are CPU-limited here. Then at 1440p, it becomes 19% slower and a significant 39% slower at 4K.

Enabling upscaling helps both GPUs, especially at 4K, and now the 7900 XTX is just shy of 60 fps, making it 19% slower than the 4090.

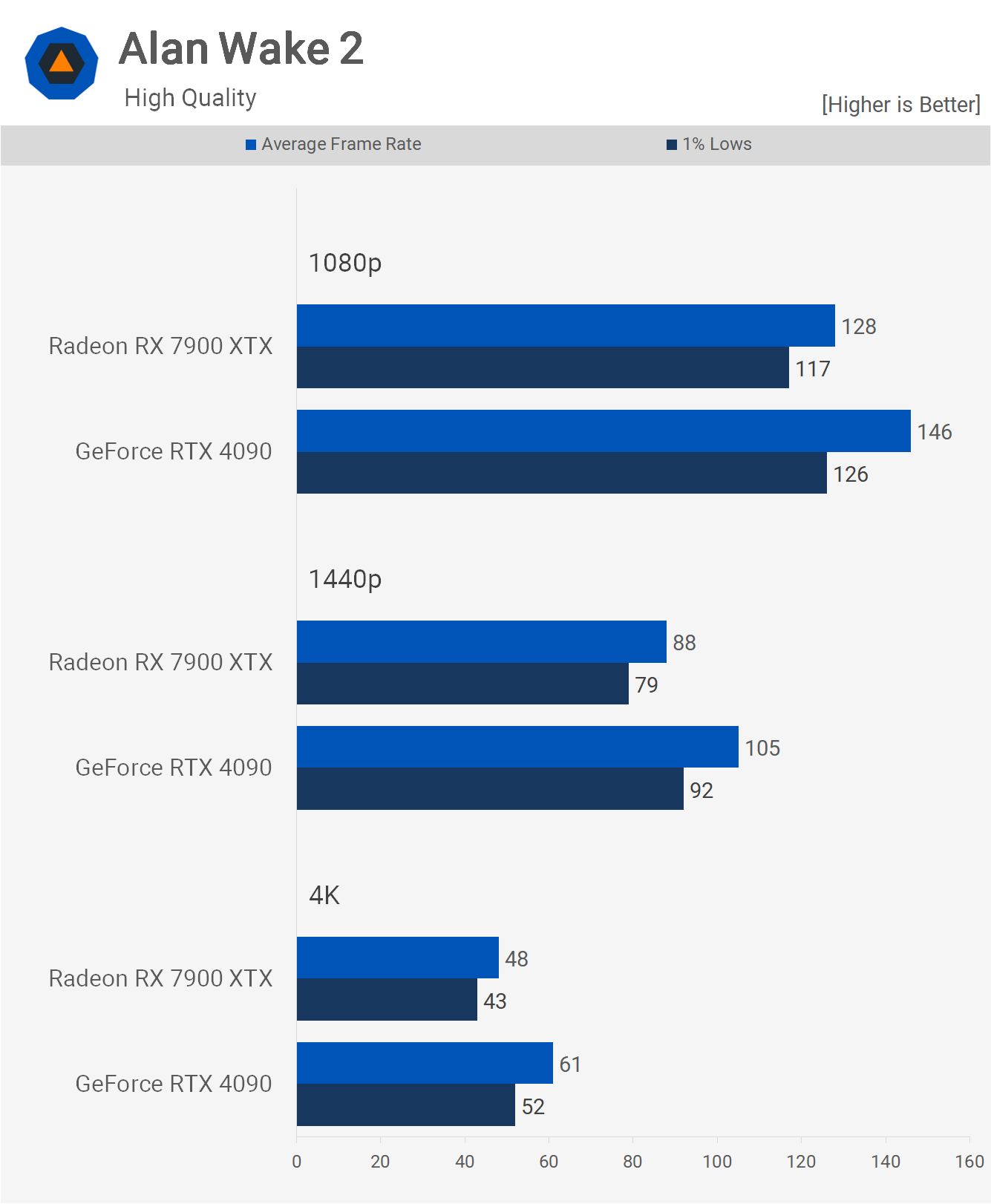

Now one of the newest games added to our list is Alan Wake 2, and here the Radeon GPU is surprisingly competitive. At 1080p, it was just 12% slower, but we're also seeing only a 16% margin at 1440p and then 21% at 4K, which are reasonable margins, considering the 7900 XTX costs almost 40% less at MSRP.

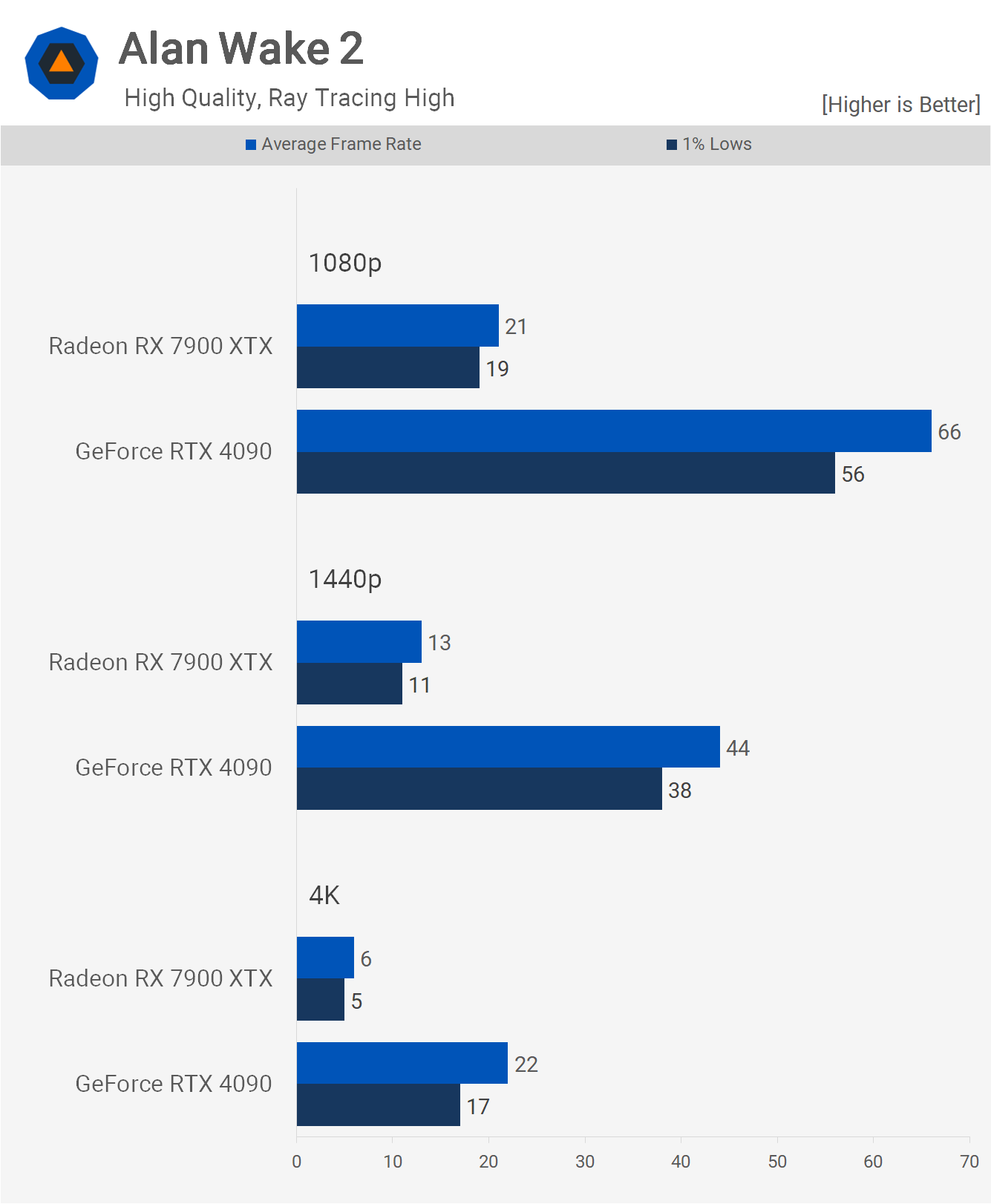

The problem for the Radeon GPU becomes apparent when enabling ray tracing. Here, it significantly lags behind, delivering unplayable performance with a mere 21 fps at 1080p. The 4090 isn't stellar here either, with just 66 fps at 1080p and 44 fps at 1440p, but it's considerably better than the XTX.

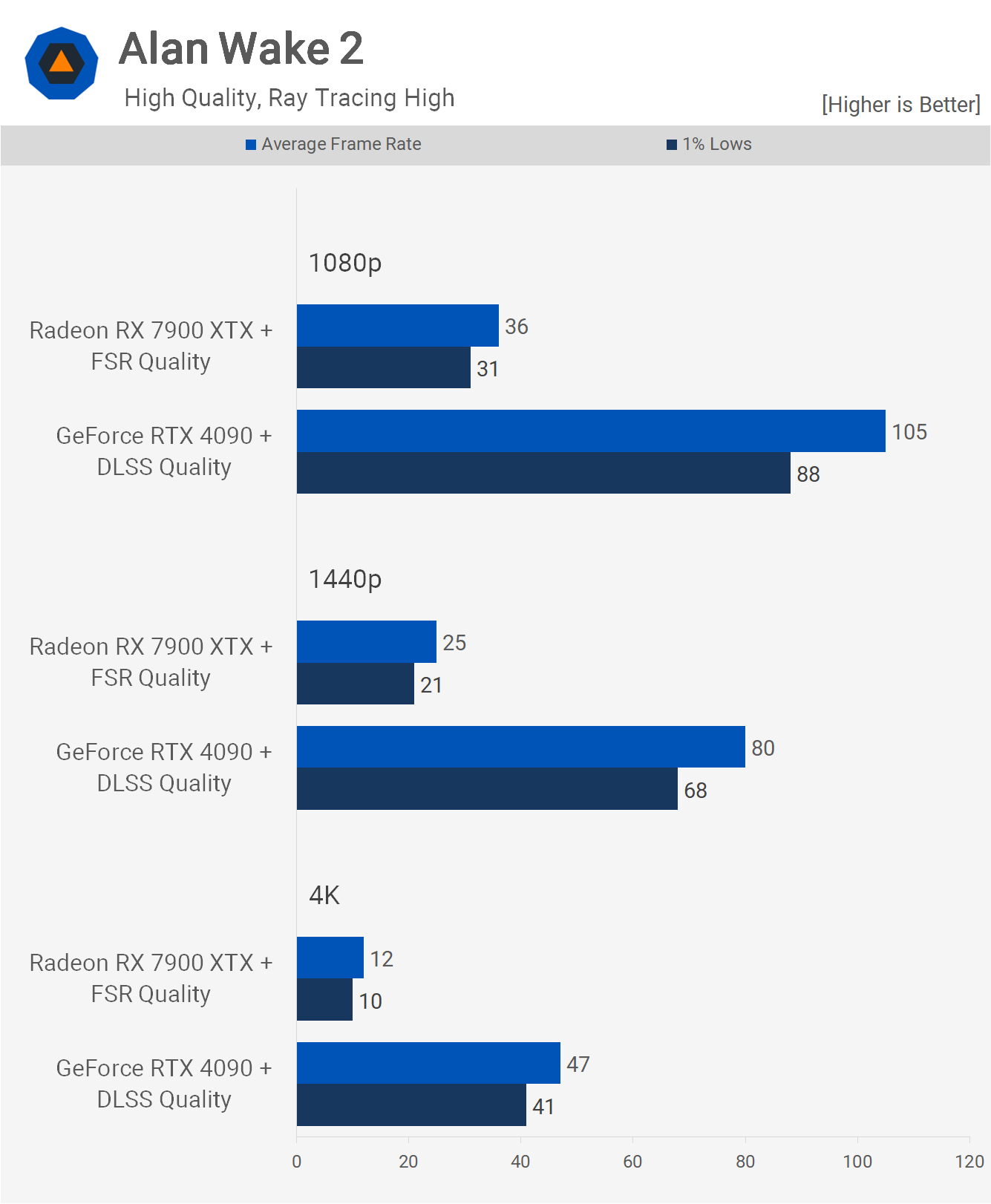

Of course, enabling upscaling helps the 4090 achieve more respectable frame rates at 1080p and 1440p, though it does little to assist the 7900 XTX.

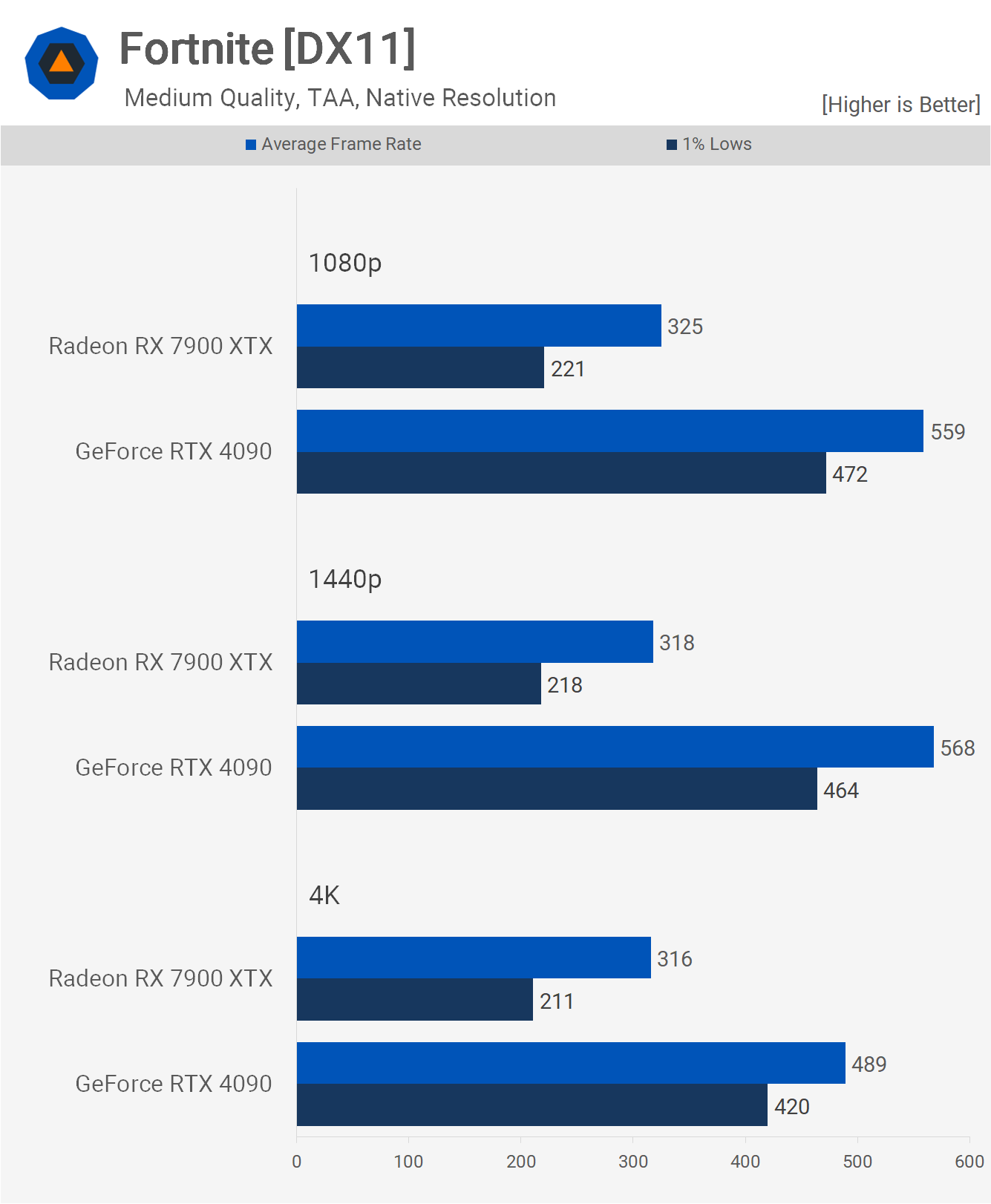

Next, we have Fortnite using the epic quality settings in DX11 mode, and here the 7900 XTX is around 30% slower than the RTX 4090 at each tested resolution, which is not outstanding but not terrible either.

However, if we opt for the more competitive medium quality settings, we find that at 1080p and 1440p, the XTX is over 40% slower, which is not ideal for those seeking every competitive advantage. The margin is slightly reduced to 35% at 4K, but even so, the GeForce GPU remains significantly faster.

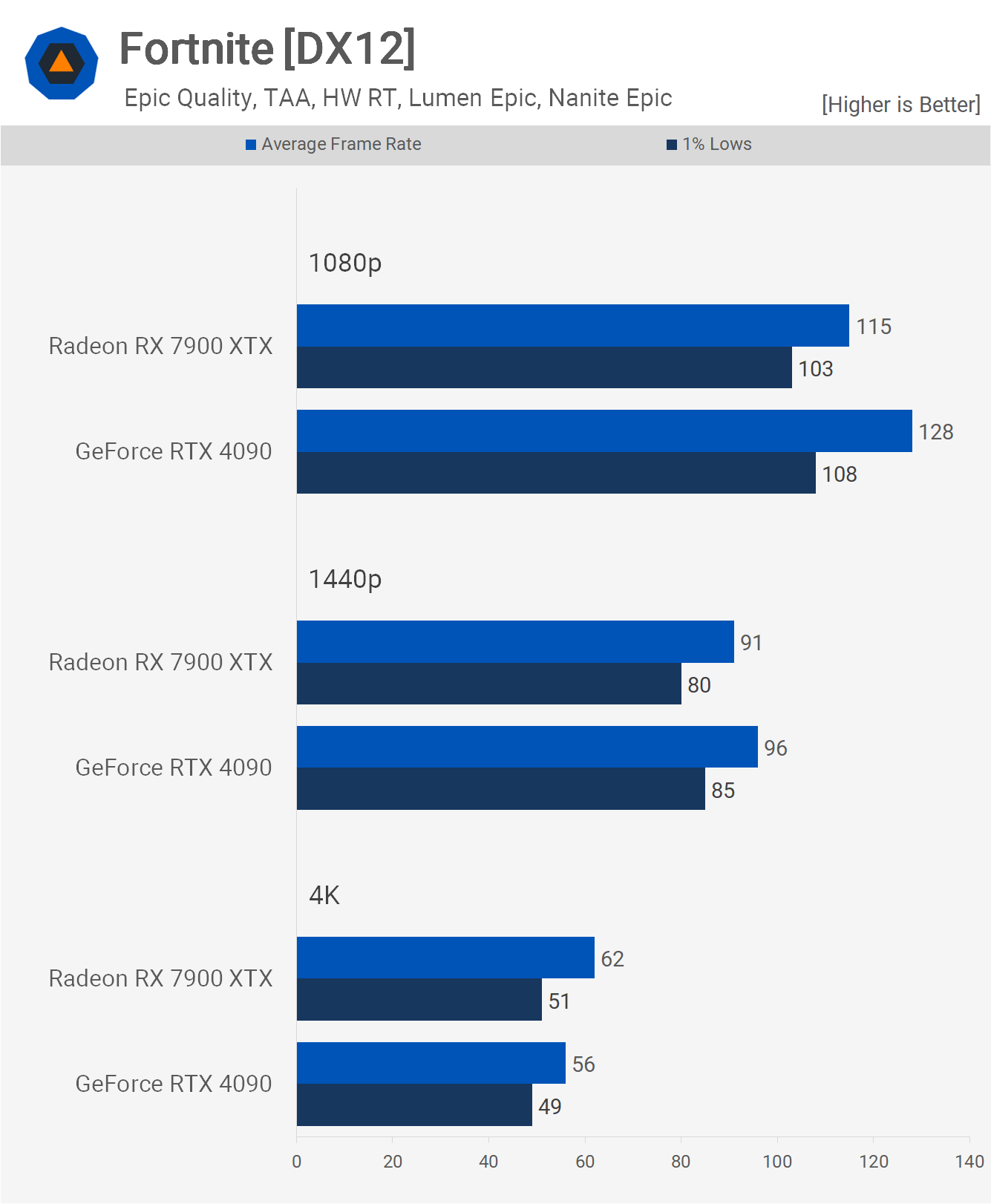

Oddly, if we switch to DX12 using the epic preset with ray tracing enabled, the Radeon GPU is shockingly competitive and even faster at 4K. We triple-checked these results and obtained the same data each time.

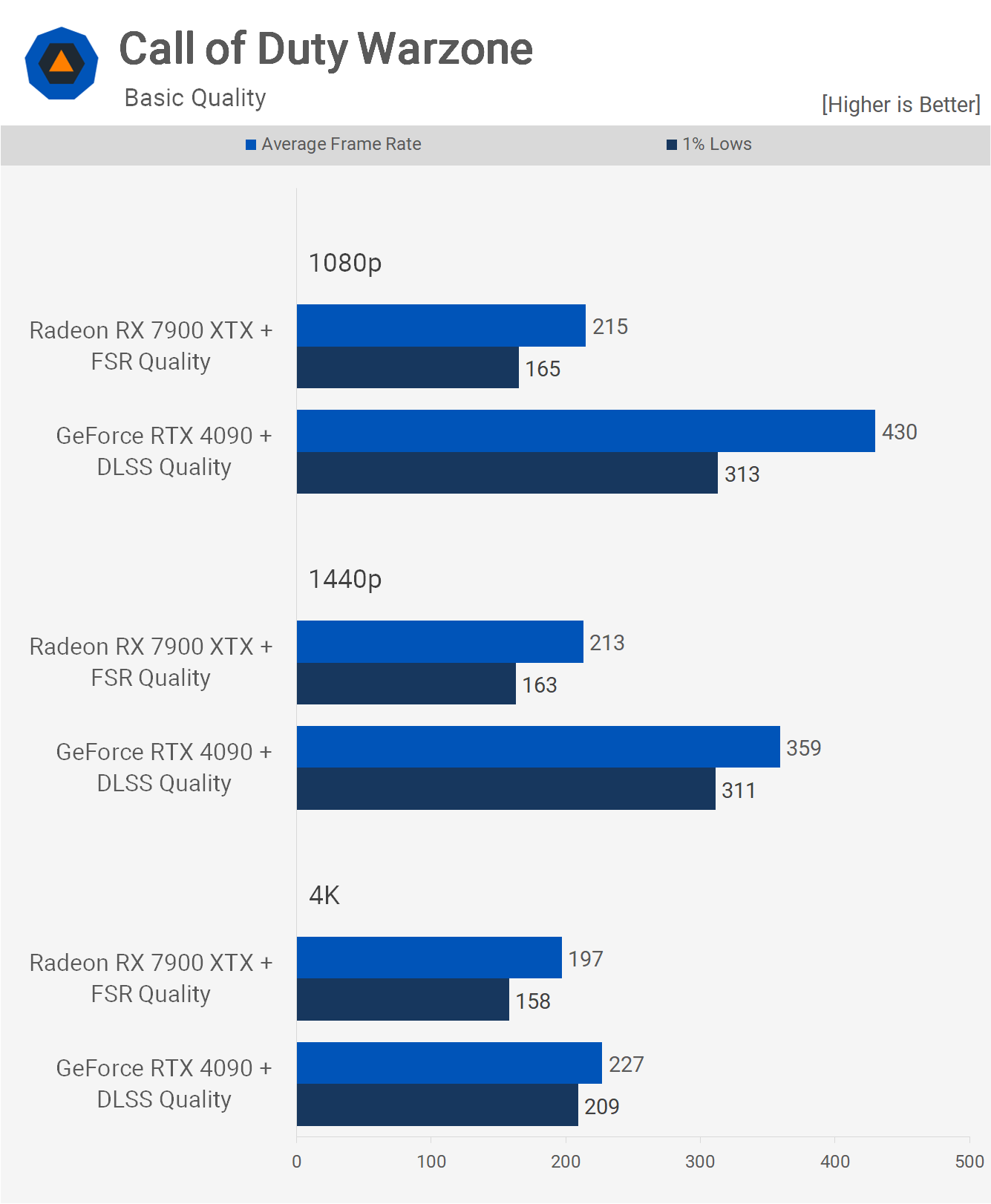

Like Fortnite, Warzone highlights an issue for Radeon GPUs when using competitive quality settings. For an unknown reason, the section of the map used for testing limited the 7900 XTX to around 200 fps, and this was observed regardless of the resolution used, which typically suggests a strong CPU bottleneck.

However, the RTX 4090 performed significantly better, with over 400 fps at 1080p and over 300 fps at 1440p, making it 63% faster at the latter resolution. Strangely, the two are very similar at 4K, so the cause of the Radeon GPU's limitation at lower resolutions is unclear, though it may be related to a driver issue. For those seeking maximum fps in Warzone, a GeForce GPU seems to be the preferable choice for now.

Also, enabling upscaling did little for either GPU using the basic quality preset, so running at the native resolution is probably better, especially for those gaming at 1080p.
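The rule of thumb used above for Warzone, that a frame rate which barely moves as resolution rises points to a limit somewhere other than the GPU, can be sketched as follows. This is only an illustration: the function name, the 5% tolerance, and the fps values are our assumptions. The values are round placeholders based on the approximate figures in the text, not the measured chart data.

```python
def looks_non_gpu_bound(fps_by_resolution, tolerance=0.05):
    """Return True if the frame rate stays within `tolerance` (as a fraction
    of the highest value) across all tested resolutions."""
    lowest = min(fps_by_resolution)
    highest = max(fps_by_resolution)
    return (highest - lowest) / highest <= tolerance


# Placeholder values for 1080p, 1440p, and 4K (hypothetical, rounded).
radeon_fps = [200, 200, 200]   # "around 200 fps" regardless of resolution
geforce_fps = [400, 300, 200]  # falls as resolution rises

print("7900 XTX flat across resolutions:", looks_non_gpu_bound(radeon_fps))
print("RTX 4090 flat across resolutions:", looks_non_gpu_bound(geforce_fps))
```

A flat result only says the GPU's rendering throughput isn't what sets the frame rate. It can't tell a CPU bottleneck apart from a driver or engine limit, which is why the cause here remains unclear.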

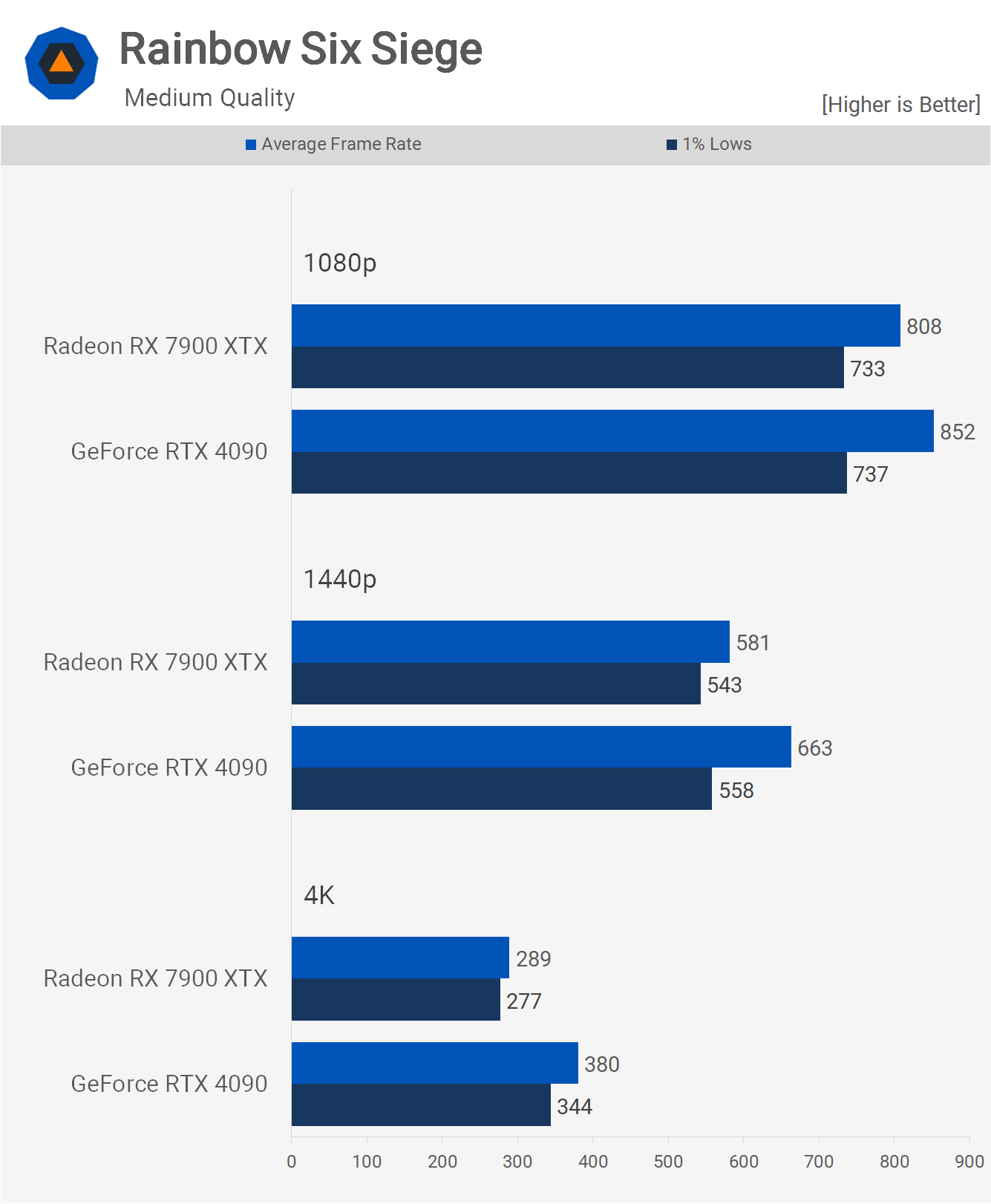

Unlike Fortnite and Warzone, performance in Rainbow Six Siege is much more competitive. The 7900 XTX was just 5% slower at 1080p, 12% slower at 1440p, and then 24% slower at 4K. In terms of value for money, the Radeon GPU performs really well here, enabling extremely high frame rates at resolutions such as 1440p.

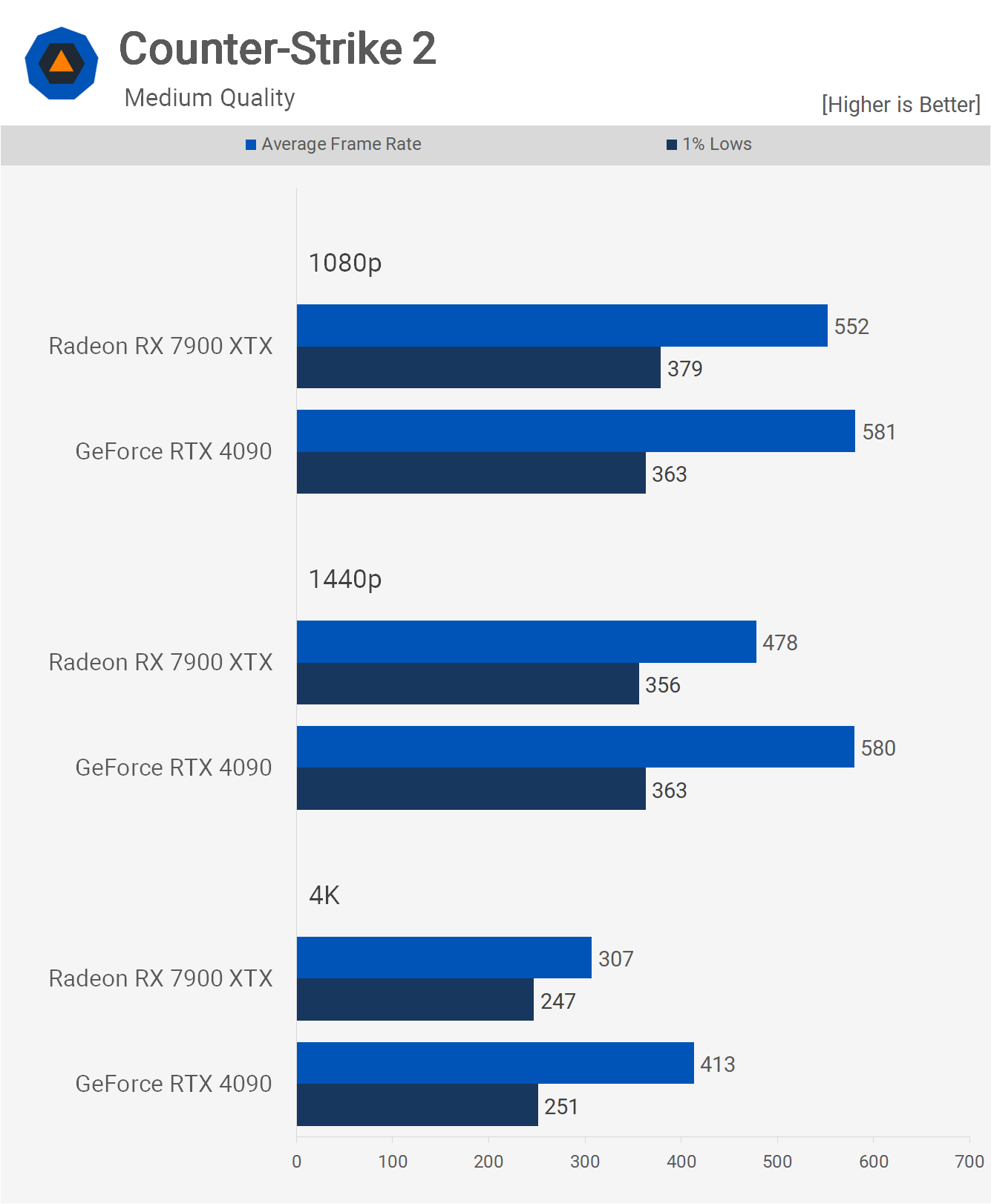

Finally, we have Counter-Strike 2 using the medium preset, and again the 7900 XTX performs quite well here, delivering comparable performance at 1080p. The RTX 4090 is CPU-limited at this resolution, though both GPUs average over 500 fps. Then at 1440p, the XTX was 18% slower than a CPU-limited 4090, and 26% slower at 4K. Overall, these are good results.

Performance Summary

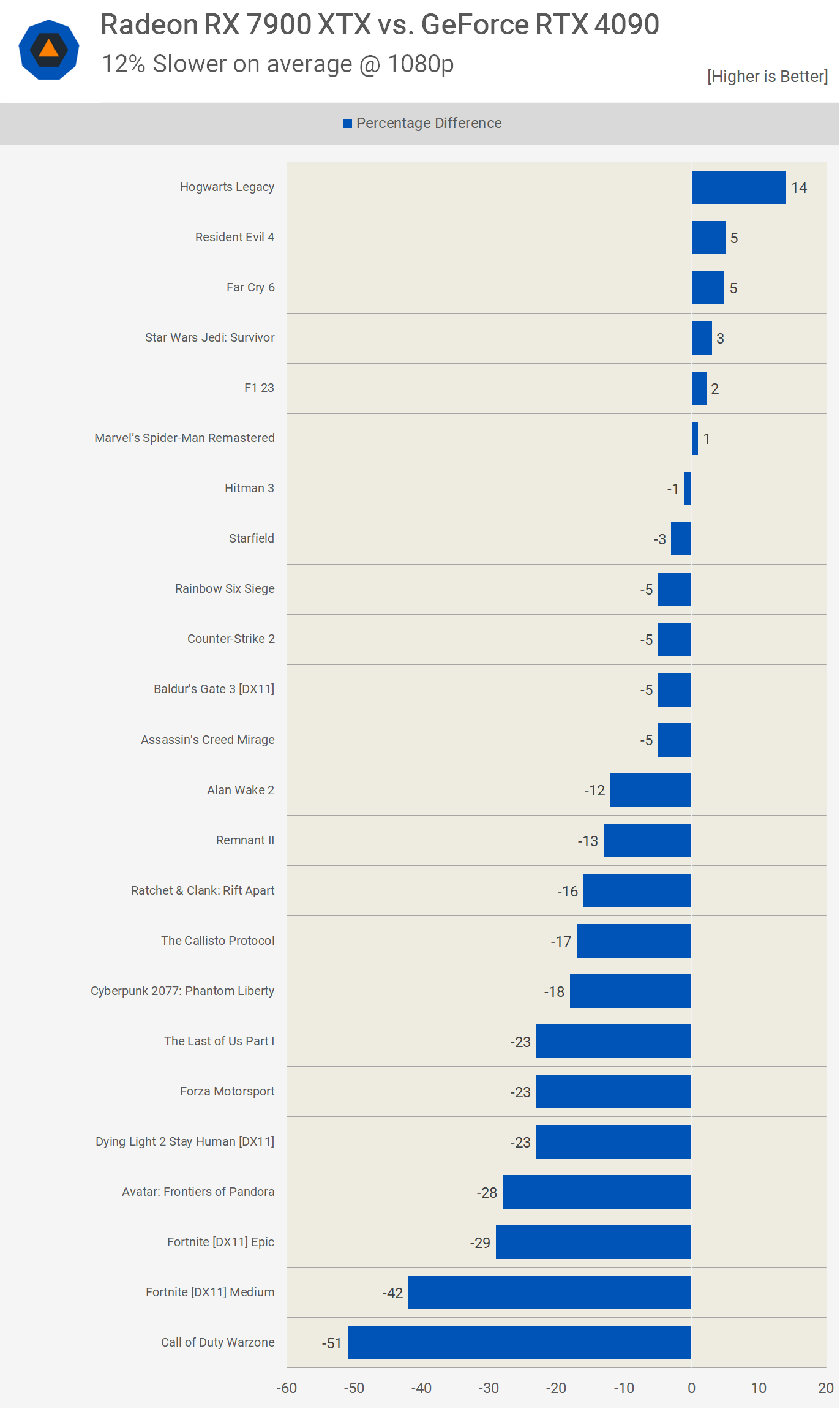

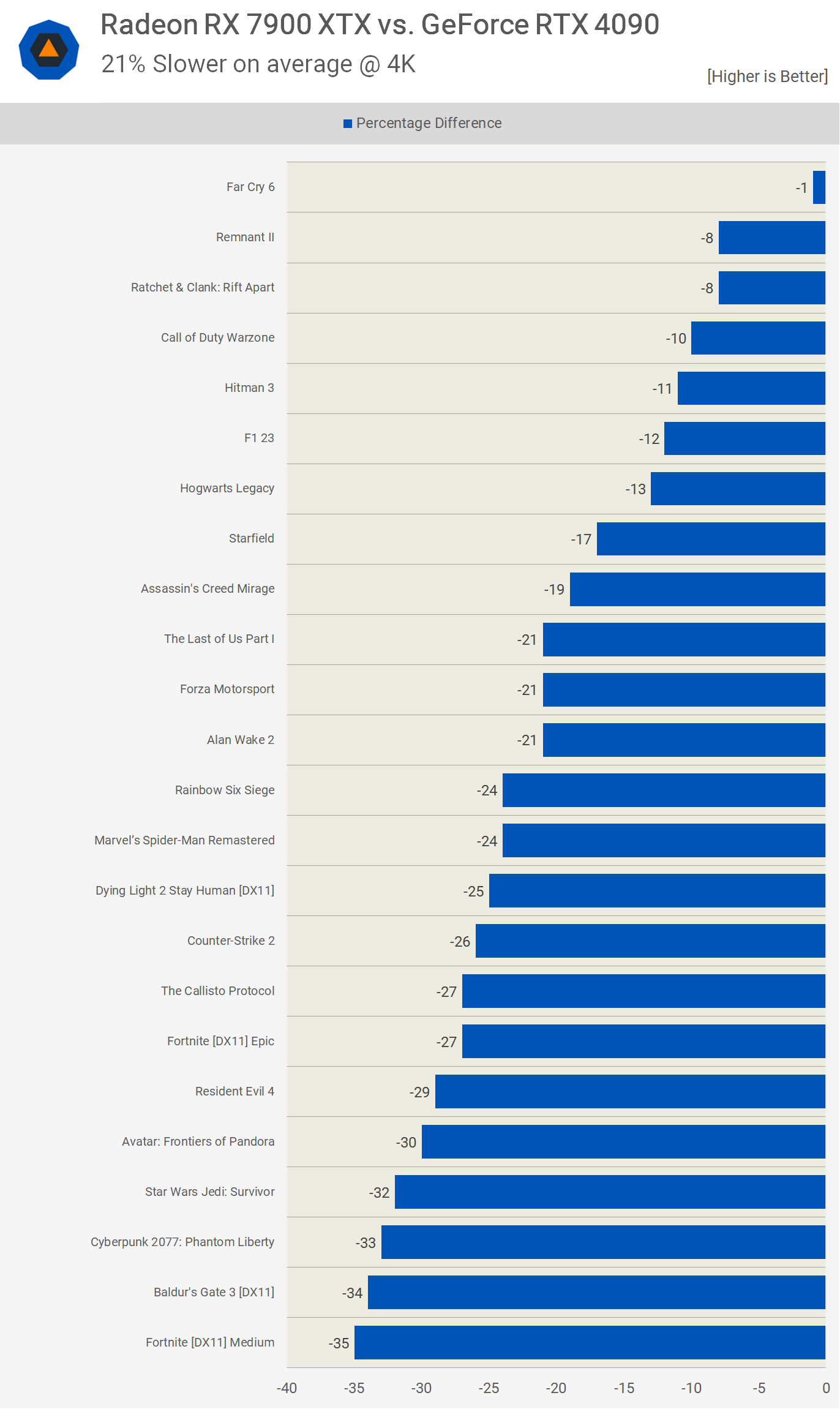

Here's a look at the performance at 1080p, excluding any ray tracing or upscaling (DLSS/FSR) results. On average, the 7900 XTX was 12% slower than the RTX 4090, which is not significantly different from our day-one 7900 XTX review data, where the Radeon GPU trailed by a 5% margin. The main difference is that we were using the 5800X3D then, whereas now we're using the 7800X3D.

Increasing the resolution to 1440p allows the RTX 4090 to extend its lead, with the 7900 XTX now seen to be 18% slower on average. Although we observed margins as large as 44%, it's still a solid performance overall for the Radeon GPU.

At 4K, the margin increases to 21%, which is favorable for AMD, considering the 7900 XTX is almost 40% cheaper at MSRP. This margin aligns with our day-one review data using the slower 5800X3D, where the 7900 XTX was, on average, 20% slower.

Ray Tracing Performance

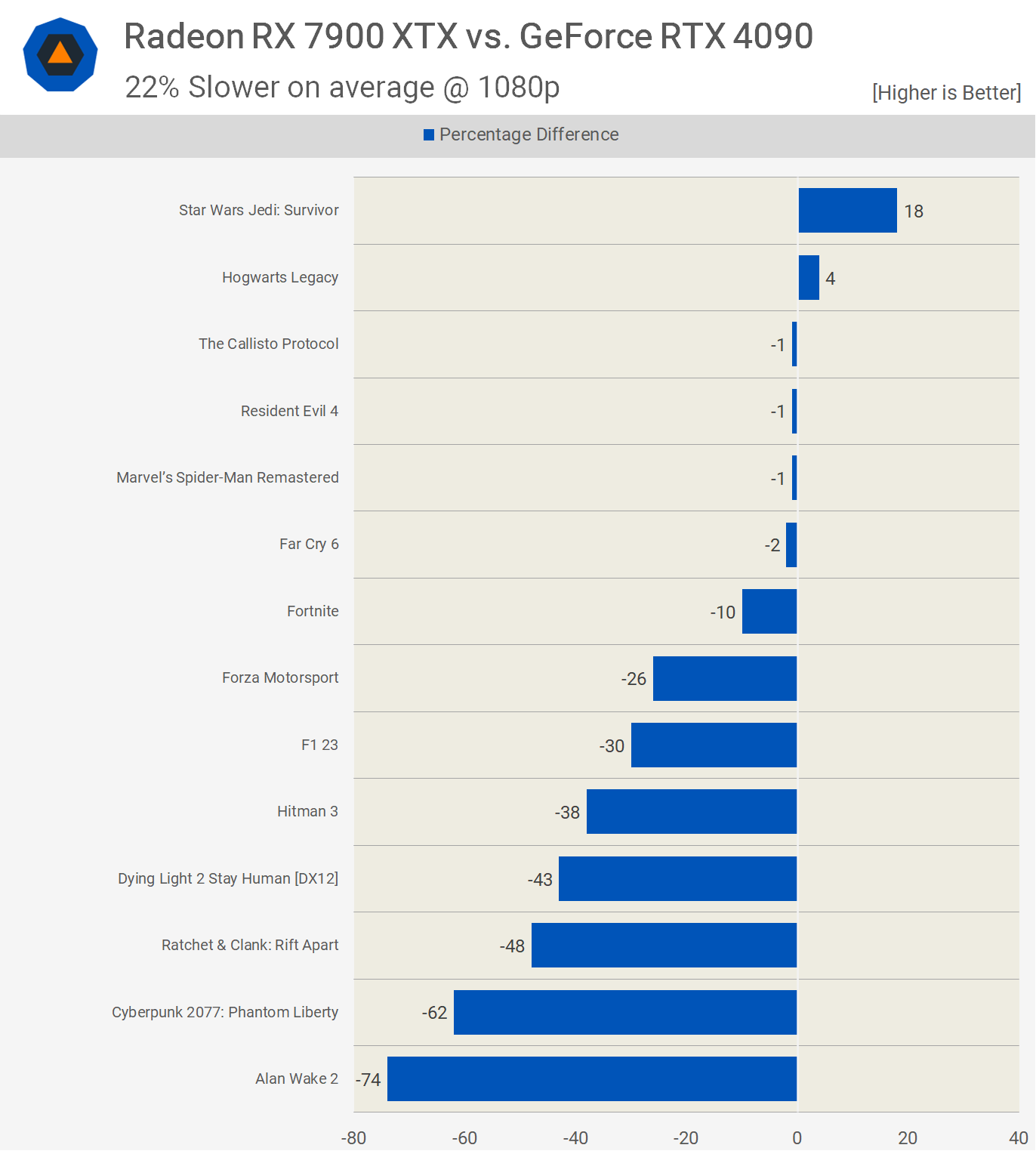

The significant issue for AMD remains its ray tracing performance, evident in the 1080p results where the 7900 XTX is already 22% slower, with several results showing margins greater than 40%.

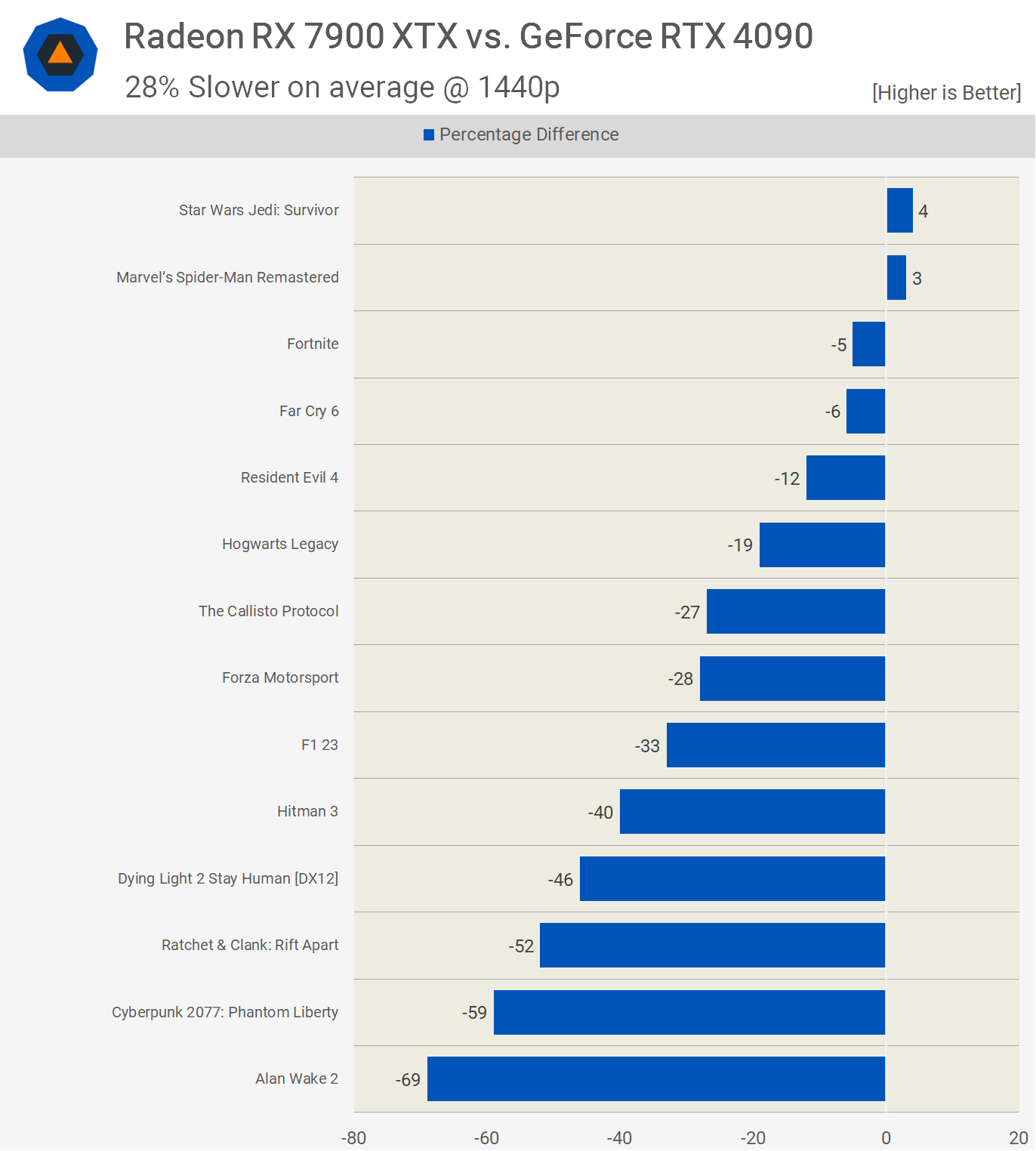

The situation worsens at 1440p, where the 7900 XTX is 28% slower, with several examples like Dying Light 2, Ratchet & Clank, Cyberpunk, and Alan Wake 2 where the Radeon GPU falls significantly behind.

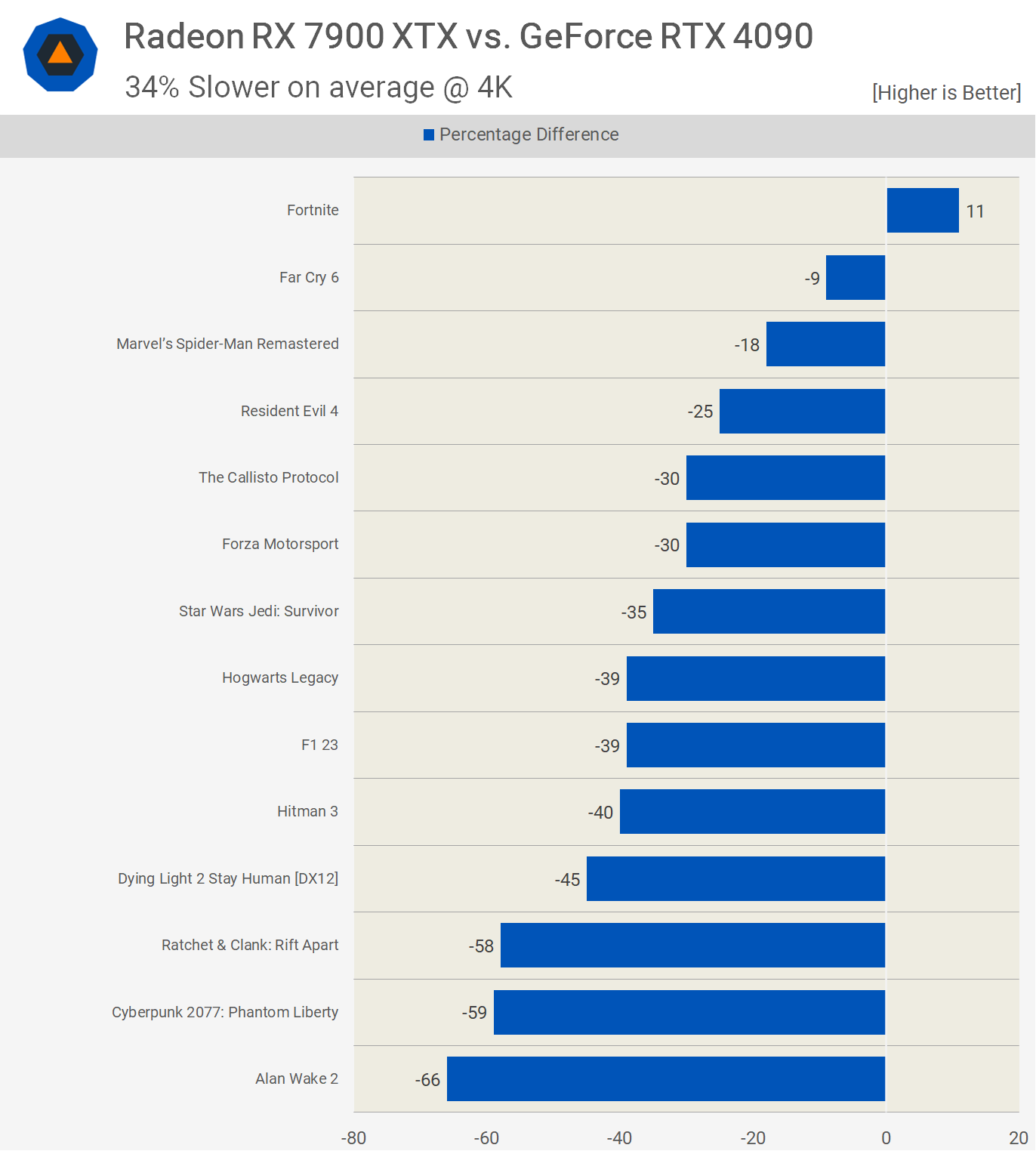

Even the RTX 4090 wasn't always practical at 4K with ray tracing enabled, so in many instances, these margins are somewhat irrelevant, but still, here the 7900 XTX was 34% slower.

Ray Tracing + Upscaling Performance

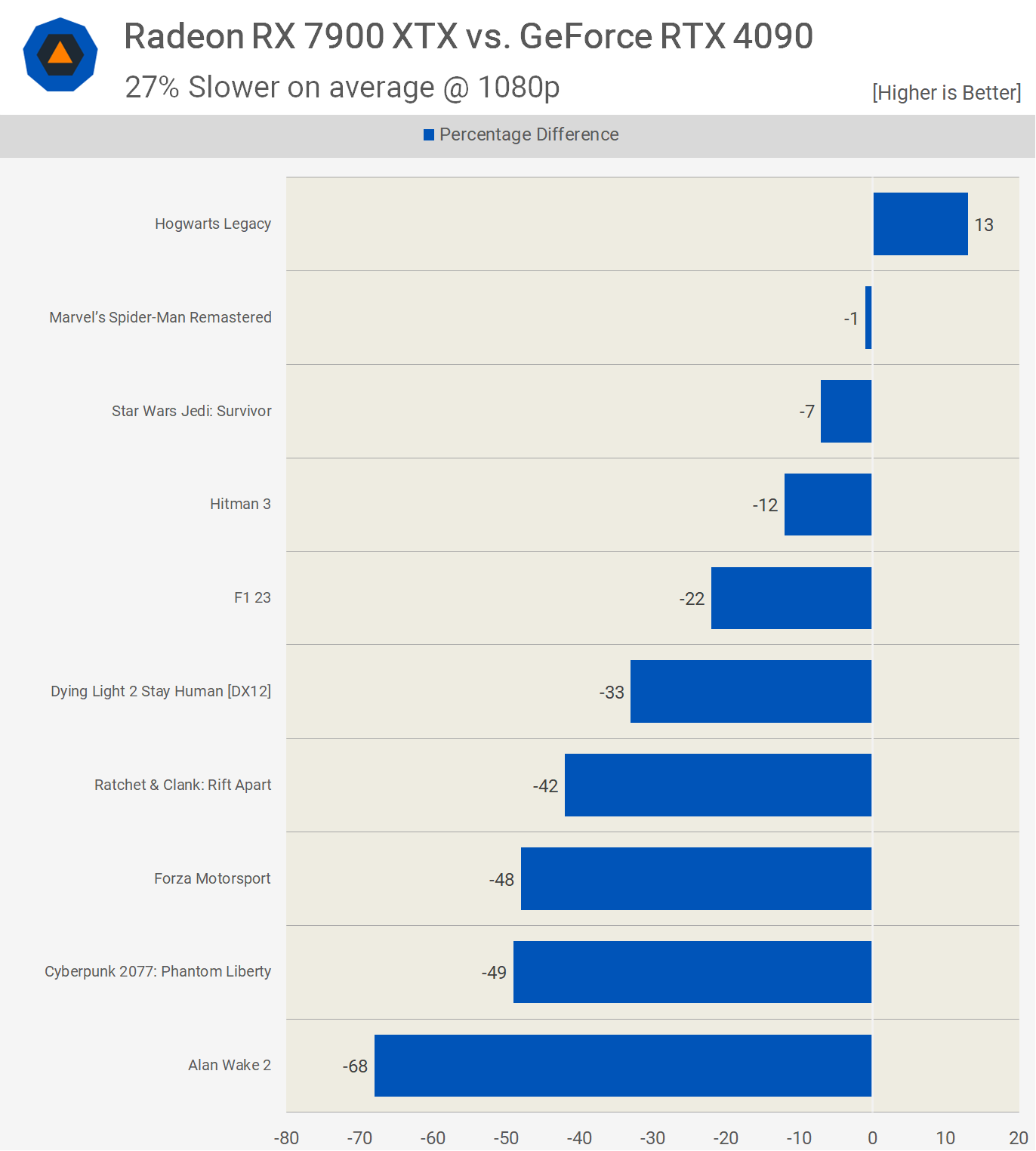

In games where we could test with ray tracing and FSR or DLSS upscaling, at 1080p, the 7900 XTX was on average 27% slower, indicating a reasonable performance advantage for the RTX 4090, even at this lower resolution.

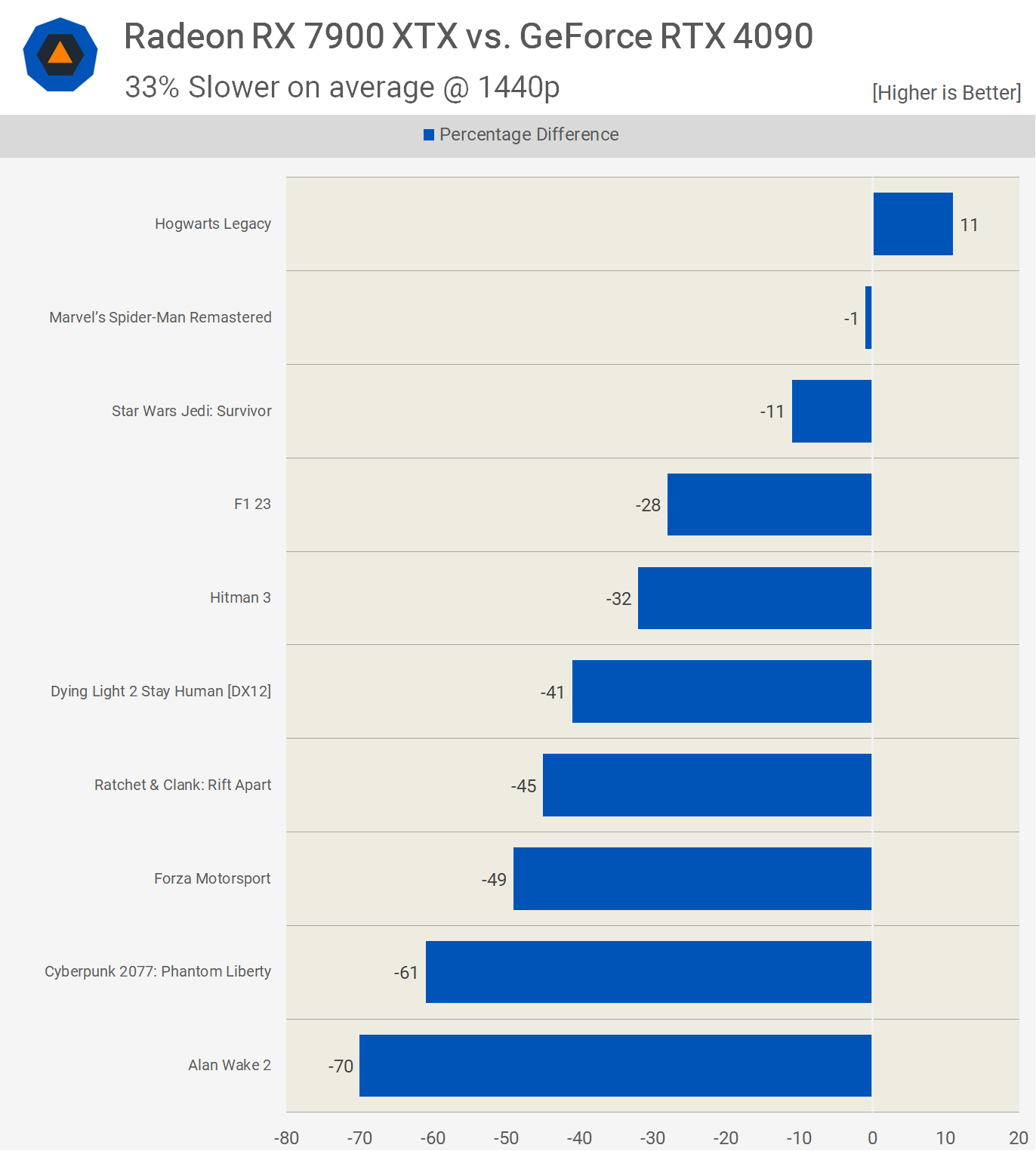

The margin increases in favor of the GeForce GPU at 1440p, where the 7900 XTX was on average 33% slower. Again, we're observing significant losses for the Radeon GPU in titles like Ratchet & Clank, Forza Motorsport, Cyberpunk, and Alan Wake 2.

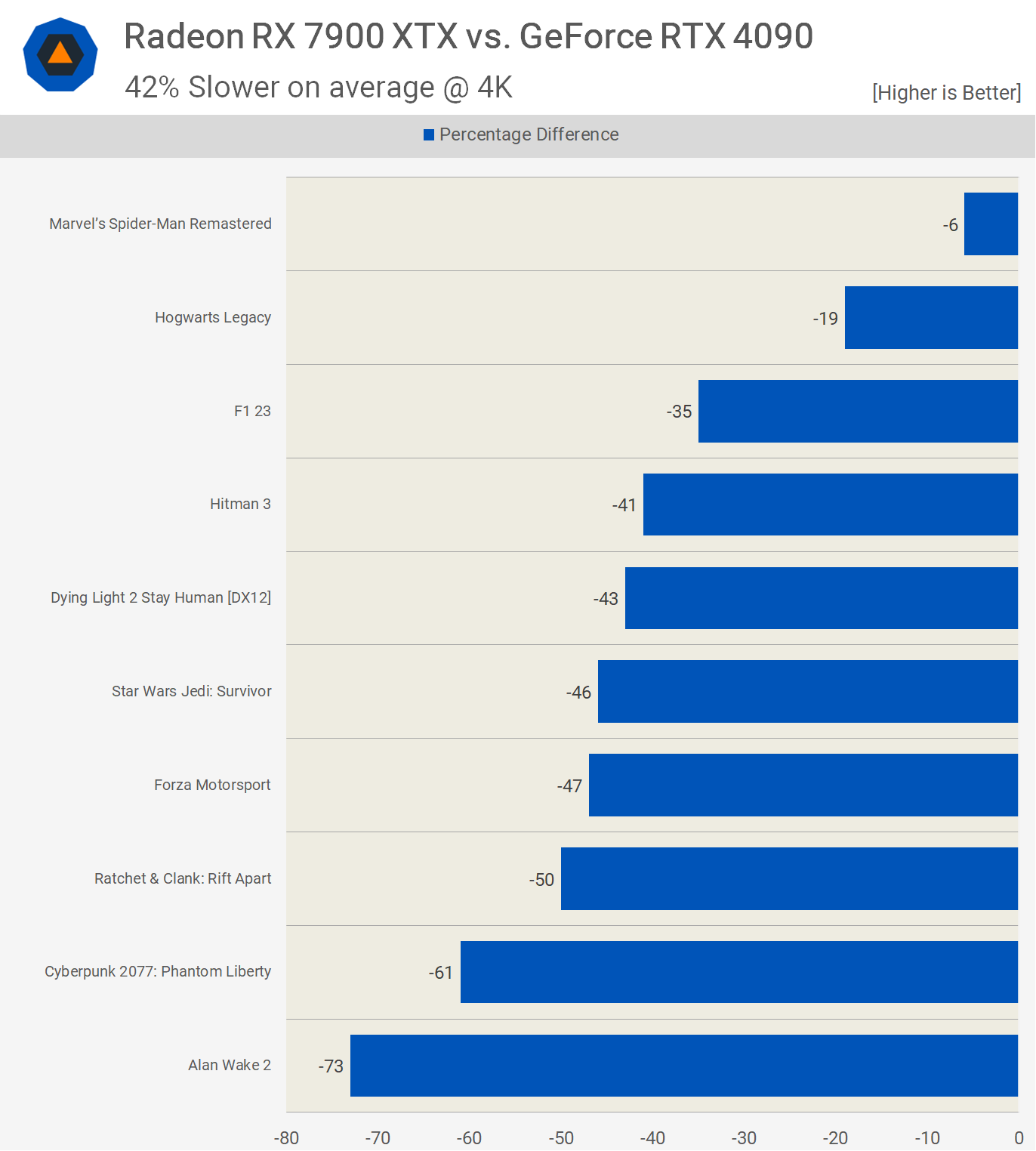

Finally, at 4K, the Radeon GPU was on average 42% slower, which is not a strong result for AMD and makes the RTX 4090 appear relatively good value in comparison to what the 7900 XTX achieved.
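To put the value argument in numbers, here is a rough performance-per-dollar sketch using the 4K averages quoted in this article. The prices are assumptions: $1,599 and $999 as the respective MSRPs (consistent with the "almost 40% cheaper" figure), and $2,000 and $1,000 as approximate street prices. The function name `relative_value` is ours.

```python
def relative_value(pct_slower: float, price_xtx: float, price_4090: float) -> float:
    """Return the 7900 XTX's performance per dollar divided by the RTX 4090's.
    Above 1.0 means the XTX is better value; below 1.0 favors the 4090."""
    relative_perf = 1.0 - pct_slower / 100.0   # XTX performance as a fraction of the 4090's
    relative_price = price_xtx / price_4090    # XTX price as a fraction of the 4090's
    return relative_perf / relative_price


# 4K average margins taken from the summaries in this article.
margins_4k = {"rasterization": 21, "ray tracing": 34, "ray tracing + upscaling": 42}

# Assumed prices in USD as (7900 XTX, RTX 4090).
prices = {"MSRP": (999, 1599), "street": (1000, 2000)}

for price_label, (xtx_price, rtx_price) in prices.items():
    for workload, pct in margins_4k.items():
        value = relative_value(pct, xtx_price, rtx_price)
        print(f"{price_label:>6} | {workload:<24} | XTX value vs 4090: {value:.2f}x")
```

Under these assumptions, the 42% deficit with ray tracing and upscaling at 4K is the one case where the 4090 edges ahead on performance per dollar at MSRP, while at the assumed street prices the XTX stays ahead in every workload. This counts average frame rate only and ignores features and image quality.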

Would you buy the 7900 XTX or the RTX 4090?

After all that testing and all those performance comparisons, which GPU would you buy? In other words, are you spending $1,000 or $2,000? As we noted at the start of this review, despite being flagship products from competing companies, they're not exactly competing products.

If you're willing to spend $1,600 – or right now it would have to be $2,000 – to acquire the GeForce RTX 4090, you're unlikely to even consider the 7900 XTX. Likewise, if you're considering the 7900 XTX, it's extremely unlikely that you'd be choosing between it and the RTX 4090. Instead, you'd be more likely considering possibly stepping up to the RTX 4080.

So in a sense, it's a bit of a pointless comparison, but we knew this going into it. Still, it's nice to have an updated look at AMD and Nvidia's best offerings.

Essentially, if you want the best of the best, it's the RTX 4090, which appeals to those with ample budgets. The 7900 XTX, on the other hand, really has to earn your money. While it might be 18% cheaper than the RTX 4080 for similar overall performance, its ray tracing performance is often much worse, and its feature set can be lacking, either in support or quality.

Still, at ~20% cheaper, the 7900 XTX becomes more appealing, especially if you're not too concerned about RT performance, which currently we're still not, though it's nice to have if you don't have to pay a significant premium.

As it turns out, the Radeon 7900 XTX isn't the hypothetical GeForce GTX 4090, as its rasterization performance isn't fast enough. However, it's better than that hypothetical card in one respect: its ray tracing performance is generally acceptable for those prioritizing visuals over frame rate.

A significant problem for AMD is Radeon performance in popular competitive titles such as Fortnite and Warzone. If you increase the visual quality settings, the Radeon GPUs are competitive in those titles, especially Fortnite when using DX12. But DX12 isn't the best way to play Fortnite competitively, nor are high-quality visuals. This gives Nvidia an advantage, which is why most Warzone and Fortnite players prefer and use GeForce GPUs.

I've played a lot of Fortnite with a Radeon GPU, and the experience can range from great to downright horrible. New game updates often disrupt driver optimization, causing frequent stuttering that makes the game unenjoyable, and this can persist for weeks or months until AMD addresses it. Using DX12 can help, but performance is generally weaker, and you'll likely encounter occasional stuttering. This is why the performance mode is based on the DX11 API, and it's what every pro player uses.

Ultimately, much will depend on the games you play. Our in-game testing for Counter-Strike 2 was very positive for the 7900 XTX, and we saw much the same in Rainbow Six Siege. So it can be a great GPU for esports titles. As always, make sure you research performance for the games you play the most.
